Journal of Computer Applications ›› 2023, Vol. 43 ›› Issue (11): 3428-3435. DOI: 10.11772/j.issn.1001-9081.2022111677

• Artificial Intelligence •

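About the author: ZHANG Jici, born in 1998 in Haicheng, Liaoning, M.S. candidate, CCF member. Her research interests include deep learning and adversarial attacks.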

Jici ZHANG, Chunlong FAN, Cailong LI, Xuedong ZHENG

Received:2022-11-11

Revised:2023-04-06

Accepted:2023-04-11

Online:2023-05-08

Published:2023-11-10

Contact: Chunlong FAN

Abstract:

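Adversarial attacks add carefully designed perturbations to the input samples of a neural network model so that the model outputs incorrect results with high confidence. Research on adversarial attacks mainly targets single-model scenarios. Attacks on multiple models are mainly carried out through cross-model transfer attacks, and there is little research on cross-model universal attack methods. By analyzing the geometric relationships among multi-model attack perturbations, the orthogonality between the adversarial directions of different models and the orthogonality between an adversarial direction and the decision boundary were clarified, and a cross-model universal attack algorithm and a corresponding optimization strategy were designed accordingly. On the CIFAR10 and SVHN datasets and six common neural network models, the proposed algorithm was verified through cross-model adversarial attacks from multiple perspectives. Experimental results show that, in the given experimental scenarios, the attack success rate of the algorithm is 1.0 and the L2 norm of the perturbation is no greater than 0.9. Compared with cross-model transfer attacks, the proposed algorithm improves the average attack success rate on the six models by up to 57% and has better universality.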
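The geometric intuition in the abstract can be illustrated with a toy case. The sketch below is not the paper's algorithm: it assumes two hypothetical linear binary classifiers whose weight vectors are exactly orthogonal, and uses the well-known closed-form minimal step for a linear classifier (a step along the normal of the decision boundary). All names and numbers are illustrative assumptions.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def min_perturbation(w, b, x, overshoot=0.02):
    """Smallest L2 step that pushes x just across the hyperplane w.x + b = 0.

    The step is parallel to w, i.e. orthogonal to the decision boundary.
    """
    score = dot(w, x) + b
    scale = -(1.0 + overshoot) * score / dot(w, w)
    return [scale * wi for wi in w]

def predict(w, b, x):
    return 1 if dot(w, x) + b > 0 else 0

# Two hypothetical linear "models" (weights, bias) with orthogonal normals.
models = [([1.0, 0.0, 0.0], -0.2), ([0.0, 2.0, 0.0], 0.5)]
x = [1.0, 1.0, 0.3]

# Because the normals are orthogonal, the step for one model does not change
# the score of the other, so the per-model steps can simply be summed.
universal = [0.0, 0.0, 0.0]
for w, b in models:
    step = min_perturbation(w, b, x)
    universal = [u + s for u, s in zip(universal, step)]

x_adv = [xi + ui for xi, ui in zip(x, universal)]
fooled = [predict(w, b, x) != predict(w, b, x_adv) for w, b in models]
success_rate = sum(fooled) / len(models)
l2_norm = math.sqrt(dot(universal, universal))

print(f"cross-model success rate: {success_rate}, L2 norm: {l2_norm:.3f}")
```

For real networks the decision boundaries are nonlinear and the adversarial directions are only approximately orthogonal, so an iterative procedure would be needed; this example only shows why orthogonality lets a single perturbation fool several models at once.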

Jici ZHANG, Chunlong FAN, Cailong LI, Xuedong ZHENG. Cross-model universal perturbation generation method based on geometric relationship[J]. Journal of Computer Applications, 2023, 43(11): 3428-3435.

| Same model under different training methods | Mode1 | Mode2 | Mode3 | Mode4 |
|---|---|---|---|---|
| MDN | DenseNet1 | DenseNet2 | DenseNet3 | DenseNet4 |
| MGN | GoogleNet1 | GoogleNet2 | GoogleNet3 | GoogleNet4 |
| MMN | MobileNet1 | MobileNet2 | MobileNet3 | MobileNet4 |
| MNN | NiN1 | NiN2 | NiN3 | NiN4 |
| MVGG | VGG1 | VGG2 | VGG3 | VGG4 |
| MRN | ResNet1 | ResNet2 | ResNet3 | ResNet4 |

Tab. 1 Model training methods (each row groups the same model under different training methods; each column Mode1 to Mode4 groups different models under the same training method)

| Dataset | Cross-model category | ηcross | L2 norm | SSIM | PSNR/dB | Total iterations |
|---|---|---|---|---|---|---|
| CIFAR10 | MDN | 1.0 | 0.470 | 0.994 | 43.962 | 1 540 |
| | MGN | 1.0 | 0.347 | 0.997 | 46.452 | 1 376 |
| | MMN | 1.0 | 0.308 | 0.997 | 47.711 | 1 707 |
| | MNN | 1.0 | 0.413 | 0.995 | 45.037 | 1 688 |
| | MVGG | 1.0 | 0.451 | 0.994 | 44.154 | 1 498 |
| | MRN | 1.0 | 0.472 | 0.994 | 43.750 | 1 487 |
| SVHN | MDN | 1.0 | 0.877 | 0.972 | 38.120 | 1 649 |
| | MGN | 1.0 | 0.765 | 0.978 | 39.263 | 1 465 |
| | MMN | 1.0 | 0.687 | 0.982 | 40.246 | 2 218 |
| | MNN | 1.0 | 0.596 | 0.985 | 41.549 | 2 025 |
| | MVGG | 1.0 | 0.632 | 0.983 | 41.131 | 1 291 |
| | MRN | 1.0 | 0.670 | 0.981 | 40.714 | 1 343 |

Tab. 2 Cross-model attack performance of Algorithm 2 across the same model under different training methods
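The tables in this section report PSNR in dB, and the abstract bounds the perturbation's L2 norm. As a rough sketch of how these two quantities could be computed for a single image, the snippet below assumes pixel values scaled to [0, 1] and the standard PSNR definition, 10·log10(MAX² / MSE). The function names are illustrative, and the paper's own evaluation code and preprocessing are not shown on this page.

```python
import math

def l2_norm(delta):
    """Euclidean (L2) norm of a flattened perturbation."""
    return math.sqrt(sum(d * d for d in delta))

def psnr(original, perturbed, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two flattened images.

    max_val is the assumed maximum pixel value (1.0 for images in [0, 1]).
    """
    mse = sum((o - p) ** 2 for o, p in zip(original, perturbed)) / len(original)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)

# Tiny illustrative "image": four pixels, each shifted by 0.1.
clean = [0.5, 0.5, 0.5, 0.5]
adv = [0.6, 0.6, 0.6, 0.6]
delta = [a - c for a, c in zip(adv, clean)]

norm_value = l2_norm(delta)
psnr_value = psnr(clean, adv)
print(f"L2 norm = {norm_value:.3f}, PSNR = {psnr_value:.2f} dB")
```

A smaller L2 norm gives a smaller mean squared error and therefore a higher PSNR, which matches the trend across rows in the tables.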

| Dataset | Cross-model category | ηcross | L2 norm | SSIM | PSNR/dB | Total iterations |
|---|---|---|---|---|---|---|
| CIFAR10 | Mode1 | 1.0 | 0.500 | 0.993 | 43.325 | 1 741 |
| | Mode2 | 1.0 | 0.483 | 0.993 | 43.555 | 1 767 |
| | Mode3 | 1.0 | 0.576 | 0.991 | 41.971 | 1 896 |
| | Mode4 | 1.0 | 0.562 | 0.992 | 42.246 | 1 872 |
| SVHN | Mode1 | 1.0 | 0.881 | 0.971 | 38.037 | 1 642 |
| | Mode2 | 1.0 | 0.886 | 0.971 | 37.932 | 1 600 |
| | Mode3 | 1.0 | 0.895 | 0.970 | 37.776 | 1 718 |
| | Mode4 | 1.0 | 0.896 | 0.971 | 37.787 | 1 624 |

Tab. 3 Cross-model attack performance of Algorithm 2 across different models under the same training method

| Dataset | Cross-model category | ηcross | L2 norm | SSIM | PSNR/dB |
|---|---|---|---|---|---|
| CIFAR10 | MDN | 1.0 | 0.407 | 0.995 | 45.322 |
| | MGN | 1.0 | 0.288 | 0.998 | 48.057 |
| | MMN | 1.0 | 0.282 | 0.998 | 48.524 |
| | MNN | 1.0 | 0.378 | 0.996 | 45.850 |
| | MVGG | 1.0 | 0.379 | 0.996 | 45.684 |
| | MRN | 1.0 | 0.388 | 0.996 | 45.410 |
| SVHN | MDN | 1.0 | 0.776 | 0.977 | 39.559 |
| | MGN | 1.0 | 0.671 | 0.983 | 40.715 |
| | MMN | 1.0 | 0.638 | 0.984 | 41.173 |
| | MNN | 1.0 | 0.553 | 0.987 | 42.451 |
| | MVGG | 1.0 | 0.537 | 0.987 | 42.701 |
| | MRN | 1.0 | 0.567 | 0.986 | 42.424 |

Tab. 4 Cross-model attack performance with L2 norm optimization across the same model under different training methods

| Dataset | Cross-model category | ηcross | L2 norm | SSIM | PSNR/dB |
|---|---|---|---|---|---|
| CIFAR10 | Mode1 | 1.0 | 0.444 | 0.994 | 44.335 |
| | Mode2 | 1.0 | 0.430 | 0.995 | 44.597 |
| | Mode3 | 1.0 | 0.516 | 0.993 | 42.927 |
| | Mode4 | 1.0 | 0.505 | 0.993 | 43.204 |
| SVHN | Mode1 | 1.0 | 0.800 | 0.975 | 39.094 |
| | Mode2 | 1.0 | 0.802 | 0.976 | 39.014 |
| | Mode3 | 1.0 | 0.810 | 0.975 | 38.835 |
| | Mode4 | 1.0 | 0.815 | 0.975 | 38.834 |

Tab. 5 Cross-model attack performance with L2 norm optimization across different models under the same training method

| Source model | Comparison algorithm | Target: DenseNet121 | Target: GoogleNet | Target: MobileNet | Target: NiN | Target: VGG11 | Target: ResNet18 | Average attack success rate |
|---|---|---|---|---|---|---|---|---|
| DenseNet121 | SINIFGSM | 0.969* | 0.742 | 0.714 | 0.529 | 0.638 | 0.642 | 0.706 |
| | VMIFGSM | 0.999* | 0.857 | 0.810 | 0.645 | 0.749 | 0.768 | 0.805 |
| | VNIFGSM | 0.999* | 0.839 | 0.806 | 0.628 | 0.740 | 0.762 | 0.796 |
| GoogleNet | SINIFGSM | 0.640 | 0.988* | 0.677 | 0.406 | 0.512 | 0.478 | 0.617 |
| | VMIFGSM | 0.762 | 1.000* | 0.717 | 0.457 | 0.571 | 0.558 | 0.678 |
| | VNIFGSM | 0.749 | 1.000* | 0.714 | 0.452 | 0.568 | 0.557 | 0.673 |
| MobileNet | SINIFGSM | 0.546 | 0.591 | 0.992* | 0.377 | 0.486 | 0.515 | 0.585 |
| | VMIFGSM | 0.628 | 0.656 | 1.000* | 0.415 | 0.543 | 0.584 | 0.638 |
| | VNIFGSM | 0.624 | 0.669 | 1.000* | 0.442 | 0.546 | 0.573 | 0.642 |
| NiN | SINIFGSM | 0.471 | 0.445 | 0.496 | 0.907* | 0.473 | 0.422 | 0.536 |
| | VMIFGSM | 0.585 | 0.545 | 0.550 | 0.973* | 0.563 | 0.525 | 0.624 |
| | VNIFGSM | 0.584 | 0.555 | 0.553 | 0.971* | 0.572 | 0.548 | 0.631 |
| VGG11 | SINIFGSM | 0.688 | 0.647 | 0.676 | 0.545 | 0.989* | 0.659 | 0.701 |
| | VMIFGSM | 0.793 | 0.742 | 0.769 | 0.654 | 0.996* | 0.772 | 0.788 |
| | VNIFGSM | 0.778 | 0.727 | 0.749 | 0.632 | 0.996* | 0.761 | 0.774 |
| ResNet18 | SINIFGSM | 0.638 | 0.568 | 0.658 | 0.471 | 0.661 | 0.980* | 0.663 |
| | VMIFGSM | 0.775 | 0.699 | 0.764 | 0.577 | 0.752 | 0.994* | 0.756 |
| | VNIFGSM | 0.765 | 0.680 | 0.762 | 0.581 | 0.741 | 0.997* | 0.754 |

Tab. 6 Attack success rates of comparison algorithms on CIFAR10 dataset and six common models (the six Target columns give the success rate on each target model)
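The last column of Tab. 6 appears to be the arithmetic mean of the six per-target success rates in the same row. The short check below recomputes it for the three DenseNet121 source rows, using values copied from the table. The variable names are illustrative, and the equal-weight mean is an assumption that happens to reproduce the reported figures.

```python
# Per-target success rates for source model DenseNet121, copied from Tab. 6.
# Order: DenseNet121, GoogleNet, MobileNet, NiN, VGG11, ResNet18.
rates_by_algorithm = {
    "SINIFGSM": [0.969, 0.742, 0.714, 0.529, 0.638, 0.642],
    "VMIFGSM": [0.999, 0.857, 0.810, 0.645, 0.749, 0.768],
    "VNIFGSM": [0.999, 0.839, 0.806, 0.628, 0.740, 0.762],
}

def average_success_rate(rates):
    """Equal-weight mean over target models, rounded to three decimals."""
    return round(sum(rates) / len(rates), 3)

averages = {name: average_success_rate(r) for name, r in rates_by_algorithm.items()}

for name, avg in averages.items():
    print(f"{name}: {avg:.3f}")
```

Note that the average includes the starred entry where the source and target models coincide, so the purely cross-model average would be lower.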

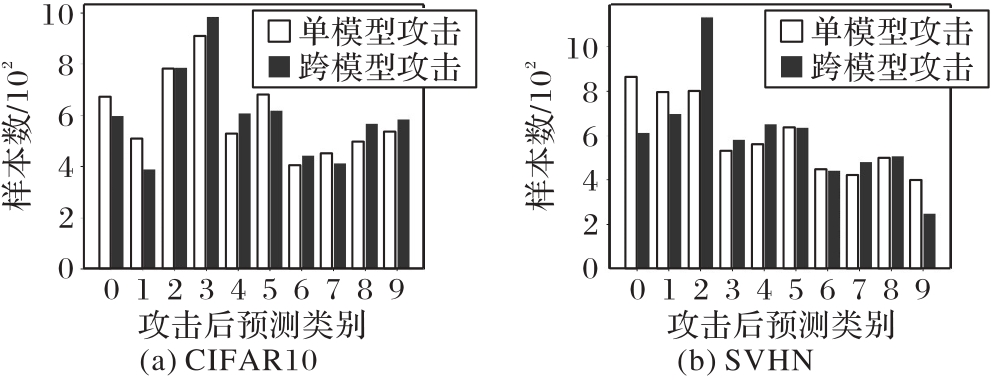

Fig. 10 Comparison of prediction results between single-model attacks and attacks across different models under different training methods on the experimental datasets
