《计算机应用》唯一官方网站 ›› 2022, Vol. 42 ›› Issue (2): 365-374.DOI: 10.11772/j.issn.1001-9081.2021020230

所属专题: 人工智能

李亚鸣1,2, 邢凯1,2( ), 邓洪武1,2, 王志勇1,2, 胡璇1,2

), 邓洪武1,2, 王志勇1,2, 胡璇1,2

收稿日期:2021-02-07

修回日期:2021-03-18

接受日期:2021-03-26

发布日期:2022-02-11

出版日期:2022-02-10

通讯作者:

邢凯

作者简介:李亚鸣(1996—),男,江西赣州人,硕士研究生,主要研究方向:深度学习;

Yaming LI1,2, Kai XING1,2( ), Hongwu DENG1,2, Zhiyong WANG1,2, Xuan HU1,2

), Hongwu DENG1,2, Zhiyong WANG1,2, Xuan HU1,2

Received:2021-02-07

Revised:2021-03-18

Accepted:2021-03-26

Online:2022-02-11

Published:2022-02-10

Contact:

Kai XING

About author:LI Yaming, born in 1996, M. S. candidate. His research interests include deep learning.摘要:

针对卷积结构的深度学习模型在小样本学习场景中泛化性能较差的问题,以AlexNet和ResNet为例,提出一种基于小样本无梯度学习的卷积结构预训练模型的性能优化方法。首先基于因果干预对样本数据进行调制,由非时序数据生成序列数据,并基于协整检验从数据分布平稳性的角度对预训练模型进行定向修剪;然后基于资本资产定价模型(CAPM)以及最优传输理论,在预训练模型中间输出过程中进行无需梯度传播的正向学习并构建一种全新的结构,从而生成在分布空间中具有明确类间区分性的表征向量;最后基于自注意力机制对生成的有效特征进行自适应加权处理,并在全连接层对特征进行聚合,从而生成具有弱相关性的embedding向量。实验结果表明所提出的方法能够使AlexNet和ResNet卷积结构预训练模型在ImageNet 2012数据集的100类图片上的Top-1准确率分别从58.82%、78.51%提升到68.50%、85.72%,可见所提方法能够基于小样本训练数据有效提高卷积结构预训练模型的性能。

中图分类号:

李亚鸣, 邢凯, 邓洪武, 王志勇, 胡璇. 基于小样本无梯度学习的卷积结构预训练模型性能优化方法[J]. 计算机应用, 2022, 42(2): 365-374.

Yaming LI, Kai XING, Hongwu DENG, Zhiyong WANG, Xuan HU. Derivative-free few-shot learning based performance optimization method of pre-trained models with convolution structure[J]. Journal of Computer Applications, 2022, 42(2): 365-374.

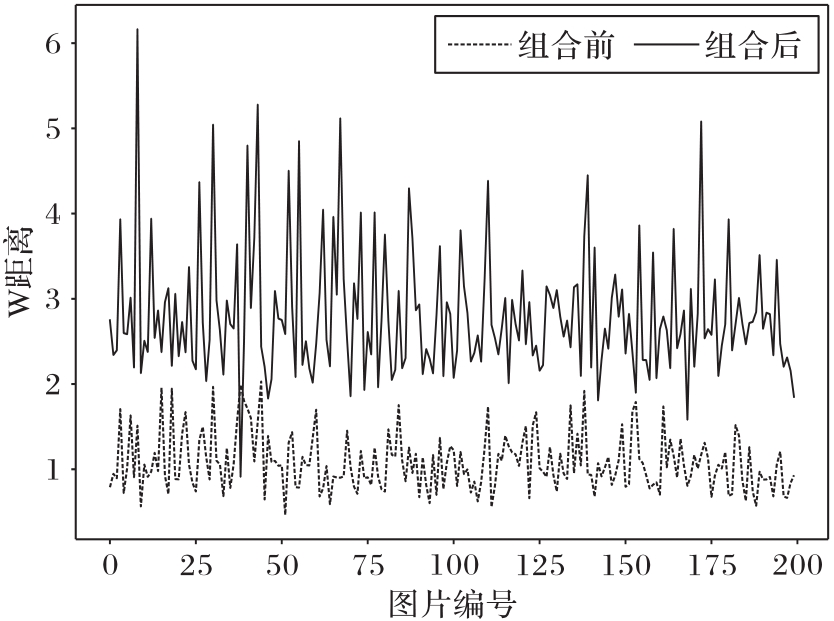

图8 随机选择类别,资本资产定价模型组合优化前后所有采样的收益R分布

Fig. 8 After randomly selecting a class, distribution of income R of all samples before and after combinational optimization of capital asset pricing model

| 网络模型 | 数据集 | Top-1 Acc | Top-5 Acc |

|---|---|---|---|

| AlexNet | ImageNet 2012(100类) | 58.82 | 83.51 |

| CIFAR-100 | 61.29 | 81.34 | |

| AlexNet改进模型 | ImageNet 2012(100类) | 68.50 | 92.25 |

| CIFAR-100 | 69.15 | 89.55 | |

| ResNet50 | ImageNet 2012(100类) | 78.51 | 94.20 |

| ResNet50改进模型 | ImageNet 2012(100类) | 85.72 | 96.65 |

表1 图片分类任务性能比较 ( %)

Tab. 1 Performance comparison of image classification tasks

| 网络模型 | 数据集 | Top-1 Acc | Top-5 Acc |

|---|---|---|---|

| AlexNet | ImageNet 2012(100类) | 58.82 | 83.51 |

| CIFAR-100 | 61.29 | 81.34 | |

| AlexNet改进模型 | ImageNet 2012(100类) | 68.50 | 92.25 |

| CIFAR-100 | 69.15 | 89.55 | |

| ResNet50 | ImageNet 2012(100类) | 78.51 | 94.20 |

| ResNet50改进模型 | ImageNet 2012(100类) | 85.72 | 96.65 |

| 1 | XIE S N, GIRSHICK R, DOLLÁR P, et al. Aggregated residual transformations for deep neural networks [C]// Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2017: 5987-5995. 10.1109/cvpr.2017.634 |

| 2 | HUANG G, LIU Z, MAATEN L VAN DER, et al. Densely connected convolutional networks [C]// Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2017: 2261-2269. 10.1109/cvpr.2017.243 |

| 3 | ZHANG M R, LUCAS J, HINTON G, et al. Lookahead optimizer: k steps forward, 1 step back[C/OL]// Proceedings of the 33rd Conference on Neural Information Processing Systems. [2021-01-22]. . |

| 4 | LILLICRAP T P, SANTORO A, MARRIS L, et al. Backpropagation and the brain[J]. Nature Reviews Neuroscience, 2020, 21(6): 335-346. 10.1038/s41583-020-0277-3 |

| 5 | KHAN A, SOHAIL A, ZAHOORA U, et al. A survey of the recent architectures of deep convolutional neural networks[J]. Artificial Intelligence Review, 2020, 53(8): 5455-5516. 10.1007/s10462-020-09825-6 |

| 6 | KINGMA D P, BA J L. Adam: a method for stochastic optimization[J]. [EB/OL]. (2017-01-30) [2021-01-03]. . |

| 7 | DUCHI J, HAZAN E, SINGER Y. Adaptive sub gradient methods for online learning and stochastic optimization[J]. Journal of Machine Learning Research, 2011, 12: 2121-2159. |

| 8 | FERNÁNDEZ-REDONDO M, HERNÁNDEZ-ESPINOSA C. Weight initialization methods for multilayer feedforward [C]// Proceedings of the 2001 European Symposium on Artificial Neural Networks. [2021-01-22]. . 10.1109/ijcnn.2001.939011 |

| 9 | SANTURKAR S, TSIPRAS D, ILYAS A, et al. How does batch normalization help optimization? [C]// Proceedings of the 32nd International Conference on Neural Information Processing Systems. Red Hook, NY: Curran Associates Inc., 2018: 2488-2498. |

| 10 | HE K M, ZHANG X Y, REN S Q, et al. Deep residual learning for image recognition [C]// Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2016: 770-778. 10.1109/cvpr.2016.90 |

| 11 | BACHLECHNER T, MAJUMDER B P, MAO H H, et al. ReZero is all you need: fast convergence at large depth[EB/OL]. (2020-06-25) [2021-02-05]. . |

| 12 | RAMACHANDRAN P, ZOPH B, LE Q V. Searching for activation functions[EB/OL]. (2017-10-27) [2021-02-05]. . |

| 13 | MISRA D, LANDSKAPE. Mish: a self-regularized non-monotonic neural activation function [C]// Proceedings of the 2020 British Machine Vision Conference. Durham: BMVA Press, 2020: No.928. |

| 14 | GAO Z T, WANG L M, WU G S. LIP: local importance-based pooling [C]// Proceedings of the 2019 IEEE/CVF Conference on Computer. Piscataway: IEEE. 2019: 3354-3363. 10.1109/iccv.2019.00345 |

| 15 | GIRSHICK R. Fast R-CNN [C]// Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2015: 1440-1448. 10.1109/iccv.2015.169 |

| 16 | WANG Z N, XIANG C Q, ZOU W B, et al. MMA regularization: decorrelating weights of neural networks by maximizing the minimal angles[C/OL]// Proceedings of the 34th Conference on Neural Information Processing Systems. [2021-01-22]. . 10.1109/lsp.2020.3037512 |

| 17 | JENSEN M C, BLACK F, SCHOLES M S. The capital asset pricing model: some empirical tests[M]// JENSEN M C. Studies in the Theory of Capital Markets. New York: Praeger Publishers Inc., 1972: 25-28. |

| 18 | LECUN Y, BOTTOU L, BENGIO Y, et al. Gradient-based learning applied to document recognition[J]. Proceedings of the IEEE, 1998, 86(11): 2278-2324. 10.1109/5.726791 |

| 19 | KRIZHEVSKY A, SUTSKEVER I, HINTON G E. ImageNet classification with deep convolutional neural networks [C]// Proceedings of the 25th International Conference on Neural Information Processing Systems. Red Hook, NY: Curran Associates Inc., 2012: 1097-1105. |

| 20 | SRIVASTAVA N, HINTON G, KRIZHEVSKY A, et al. Dropout: a simple way to prevent neural networks from overfitting[J]. Journal of Machine Learning Research, 2014, 15: 1929-1958. |

| 21 | SIMONYAN K, ZISSERMAN A. Very deep convolutional networks for large-scale image recognition[EB/OL]. (2015-04-10) [2021-01-20]. . 10.5244/c.28.6 |

| 22 | SANDLER M, HOWARD A, ZHU M L, et al. MobileNetV2: inverted residuals and linear bottlenecks [C]// Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2018: 4510-4520. 10.1109/cvpr.2018.00474 |

| 23 | LIN T Y, DOLLÁR P, GIRSHICK R, et al. Feature pyramid networks for object detection [C]// Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2017: 936-944. 10.1109/cvpr.2017.106 |

| 24 | IGNATOV A, GOOL L VAN, TIMOFTE R. Replacing mobile camera ISP with a single deep learning model [C]// Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops. Piscataway: IEEE, 2020: 2275-2285. 10.1109/cvprw50498.2020.00276 |

| 25 | BLUME M E, FRIEND I. A new look at the capital asset pricing model[J]. The Journal of Finance, 1973, 28(1): 19-34. 10.1111/j.1540-6261.1973.tb01342.x |

| 26 | PURKAIT P, ZHAO C, ZACH C. SPP-net: deep absolute pose regression with synthetic views[EB/OL]. (2017-12-09) [2021-02-05]. . |

| 27 | VILLANI C. Optimal Transport: Old and New[M]. Berlin: Springer, 2009: 131-140. 10.1007/978-3-540-71050-9_28 |

| 28 | PEYRÉ G, CUTURI M. Computational optimal transport: with applications to data science[J]. Foundations and Trends in Machine Learning, 2019, 11(5/6): 355-607. 10.1561/2200000073 |

| 29 | DANILA B, YU Y, MARSH J A, et al. Optimal transport on complex networks[J]. Physical Review E, Statistical, Nonlinear, and Soft Matter Physics, 2006, 74(4 Pt 2): No.046106. 10.1103/physreve.74.046106 |

| 30 | ARJOVSKY M, CHINTALA S, BOTTOU L. Wasserstein generative adversarial networks [C]// Proceedings of 34th Machine Learning Research. New York: JMLR.org, 2017: 214-223. |

| 31 | ALBAWI S, MOHAMMED T A, AL-AZAWI S. Understanding of a convolutional neural network [C]// Proceedings of the 2017 International Conference on Engineering and Technology. Piscataway: IEEE, 2017: 1-6. 10.1109/icengtechnol.2017.8308186 |

| 32 | PEARL J, MACKENZIE D. The Book of Why: the New Science of Cause and Effect[M]. New York: Basic Books, 2018: 6-29. |

| 33 | MELLOR J, TURNER J, STORKEY A, et al. Neural architecture search without training[J]. Journal of Machine Learning Research, 2019, 20: 1-21. |

| 34 | WEI W W S. Time Series Analysis: Univariate and Multivariate Methods[M]. 2nd ed. London: Pearson, 2006: 15-80. |

| 35 | KREMERS J J M, ERICSSON N R, DOLADO J J. The power of cointegration tests[J]. Oxford Bulletin of Economics and Statistics, 1992, 54(3): 325-348. 10.1111/j.1468-0084.1992.tb00005.x |

| 36 | HYLLEBERG S, ENGLE R F, GRANGER C W J, et al. Seasonal integration and cointegration[J]. Journal of Econometrics, 1990, 44(1/2): 215-238. 10.1016/0304-4076(90)90080-d |

| 37 | MOLCHANOV P, MALLYA A, TYREE S, et al. Importance estimation for neural network pruning [C]// Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2019: 11256-11264. 10.1109/cvpr.2019.01152 |

| 38 | LIU Z, SUN M J, ZHOU T H, et al. Rethinking the value of network pruning[EB/OL]. (2019-03-05) [2021-02-05]. . 10.1002/mrm.27229 |

| 39 | GEIRHOS R, RUBISCH P, MICHAELIS C, et al. ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness[EB/OL]. (2019-01-14) [2021-02-05]. . 10.1167/19.10.209c |

| 40 | LI X L, ZHOU Y B, WU T F, et al. Learn to grow: a continual structure learning framework for overcoming catastrophic forgetting [C]// Proceedings of the 36th International Conference on Machine Learning. New York: JMLR.org, 2019: 3925-3934. |

| 41 | WORTSMAN M, FARHADI A, RASTEGARI M. Discovering neural wirings[C/OL]// Proceedings of the 33rd Conference on Neural Information Processing Systems. [2021-01-22]. . 10.1109/cvpr42600.2020.01191 |

| 42 | KIM Y, PARK W, ROH M C, et al. GroupFace: learning latent groups and constructing group-based representations for face recognition [C]// Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2020: 5620-5629. 10.1109/cvpr42600.2020.00566 |

| 43 | VASWANI A, SHAZEER N, PARMAR N, et al. Attention is all you need [C]// Proceedings of the 31st International Conference on Neural Information Processing Systems. Red Hook, NY: Curran Associates Inc., 2017: 6000-6010. 10.1016/s0262-4079(17)32358-8 |

| 44 | RUSSAKOVSKY O, DENG J, SU H, et al. ImageNet large scale visual recognition challenge[J]. International Journal of Computer Vision, 2015, 115(3): 211-252. 10.1007/s11263-015-0816-y |

| 45 | KRIZHEVSKY A. learning multiple layers of features from tiny images[R/OL]. [2021-01-25]. . |

| [1] | 秦璟, 秦志光, 李发礼, 彭悦恒. 基于概率稀疏自注意力神经网络的重性抑郁疾患诊断[J]. 《计算机应用》唯一官方网站, 2024, 44(9): 2970-2974. |

| [2] | 李力铤, 华蓓, 贺若舟, 徐况. 基于解耦注意力机制的多变量时序预测模型[J]. 《计算机应用》唯一官方网站, 2024, 44(9): 2732-2738. |

| [3] | 李烨恒, 罗光圣, 苏前敏. 基于改进YOLOv5的Logo检测算法[J]. 《计算机应用》唯一官方网站, 2024, 44(8): 2580-2587. |

| [4] | 薛凯鹏, 徐涛, 廖春节. 融合自监督和多层交叉注意力的多模态情感分析网络[J]. 《计算机应用》唯一官方网站, 2024, 44(8): 2387-2392. |

| [5] | 李晨阳, 张龙, 郑秋生, 钱少华. 基于扩散序列的多元可控文本生成[J]. 《计算机应用》唯一官方网站, 2024, 44(8): 2414-2420. |

| [6] | 刘越, 刘芳, 武奥运, 柴秋月, 王天笑. 基于自注意力机制与图卷积的3D目标检测网络[J]. 《计算机应用》唯一官方网站, 2024, 44(6): 1972-1977. |

| [7] | 赵征宇, 罗景, 涂新辉. 基于多粒度语义融合的信息检索方法[J]. 《计算机应用》唯一官方网站, 2024, 44(6): 1775-1780. |

| [8] | 徐泽鑫, 杨磊, 李康顺. 较短的长序列时间序列预测模型[J]. 《计算机应用》唯一官方网站, 2024, 44(6): 1824-1831. |

| [9] | 黄荣, 宋俊杰, 周树波, 刘浩. 基于自监督视觉Transformer的图像美学质量评价方法[J]. 《计算机应用》唯一官方网站, 2024, 44(4): 1269-1276. |

| [10] | 余杭, 周艳玲, 翟梦鑫, 刘涵. 基于预训练模型与标签融合的文本分类[J]. 《计算机应用》唯一官方网站, 2024, 44(3): 709-714. |

| [11] | 黄子麒, 胡建鹏. 实体类别增强的汽车领域嵌套命名实体识别[J]. 《计算机应用》唯一官方网站, 2024, 44(2): 377-384. |

| [12] | 王楷天, 叶青, 程春雷. 基于异构图表示的中医电子病历分类方法[J]. 《计算机应用》唯一官方网站, 2024, 44(2): 411-417. |

| [13] | 罗歆然, 李天瑞, 贾真. 基于自注意力机制与词汇增强的中文医学命名实体识别[J]. 《计算机应用》唯一官方网站, 2024, 44(2): 385-392. |

| [14] | 仇丽青, 苏小盼. 个性化多层兴趣提取点击率预测模型[J]. 《计算机应用》唯一官方网站, 2024, 44(11): 3411-3418. |

| [15] | 林翔, 金彪, 尤玮婧, 姚志强, 熊金波. 基于脆弱指纹的深度神经网络模型完整性验证框架[J]. 《计算机应用》唯一官方网站, 2024, 44(11): 3479-3486. |

| 阅读次数 | ||||||

|

全文 |

|

|||||

|

摘要 |

|

|||||