Journal of Computer Applications, 2024, Vol. 44, Issue (7): 2183-2191. DOI: 10.11772/j.issn.1001-9081.2023070976

Wenliang WEI1,2, Yangping WANG1,2, Biao YUE1,2, Anzheng WANG1,2, Zhe ZHANG1,2

Received: 2023-07-19

Revised: 2023-10-06

Accepted: 2023-10-10

Online: 2023-10-26

Published: 2024-07-10

Corresponding author: Wenliang WEI

About the author: WANG Yangping, born in 1973 in Dazhou, Sichuan, female, Ph. D., professor, CCF member. Her research interests include digital image processing and computer vision.

Abstract:

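Existing infrared and visible image fusion models ignore illumination during fusion and rely on conventional fusion strategies, so their fused images lose detail and render salient information poorly. To address these problems, a deep learning model for infrared and visible image fusion based on illumination weight allocation and attention was proposed. First, an Illumination Weight Allocation Network (IWA-Net) was designed to estimate the illumination distribution and compute illumination weights. Second, a CM-L1 norm fusion strategy was introduced to strengthen the dependencies between pixels and smooth the salient features. Finally, a decoder built entirely from convolutional layers reconstructed the fused image. Fusion experiments on public datasets show that, compared with the baseline models, the proposed model improves all six selected evaluation metrics; in particular, Spatial Frequency (SF) and Mutual Information (MI) increase by 45% and 41% on average, respectively. The model effectively reduces edge blur and yields fused images with high sharpness and contrast. Both subjective and objective evaluations show that its fusion results are better than those of the compared models.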
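The abstract does not describe how CM-L1 or IWA-Net work internally. As a point of reference only, the sketch below shows the plain L1-norm activity-level fusion strategy known from DenseFuse: the activity of each source is the channel-wise L1 norm of its encoder features, block-averaged over a small window and normalized into soft per-pixel weights. Two scalar priors, `w_ir` and `w_vis`, stand in for illumination weights. The helper names, the softmax over assumed `[day, night]` logits, and the rule that the visible branch receives P(day) are all hypothetical illustrations, not the authors' CM-L1 or IWA-Net.

```python
import numpy as np

def box_mean(a: np.ndarray, r: int) -> np.ndarray:
    """Mean over a (2r+1)x(2r+1) window with edge padding, via 2-D cumulative sums."""
    k = 2 * r + 1
    p = np.pad(a, r, mode="edge")
    # c[i, j] = sum of p[:i, :j]; a leading zero row/column simplifies indexing.
    c = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    s = c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]
    return s / (k * k)

def illumination_weights(logits: np.ndarray) -> tuple[float, float]:
    """Hypothetical: softmax over [day, night] logits -> (w_ir, w_vis).
    Assumes the visible branch is trusted in proportion to P(day)."""
    z = np.exp(logits - logits.max())
    p_day, p_night = z / z.sum()
    return float(p_night), float(p_day)

def l1_norm_fusion(feat_ir, feat_vis, w_ir=0.5, w_vis=0.5, r=1, eps=1e-12):
    """DenseFuse-style L1-norm fusion of (C, H, W) feature maps, scaled by priors."""
    # Activity level: L1 norm over channels at each pixel, then block-averaged.
    act_ir = w_ir * box_mean(np.abs(feat_ir).sum(axis=0), r)
    act_vis = w_vis * box_mean(np.abs(feat_vis).sum(axis=0), r)
    # Soft per-pixel weights that sum to 1 across the two sources.
    m_ir = act_ir / (act_ir + act_vis + eps)
    return m_ir[None] * feat_ir + (1.0 - m_ir)[None] * feat_vis

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ir, vis = rng.random((2, 16, 32, 32))  # two (C=16, H=32, W=32) feature stacks
    w_ir, w_vis = illumination_weights(np.array([2.0, -1.0]))  # assumed "day" logits
    print(l1_norm_fusion(ir, vis, w_ir, w_vis).shape)
```

Block averaging makes the weight map depend on a neighbourhood rather than a single pixel, which is one simple way to smooth salient regions; the source's CM-L1 strategy may do this quite differently.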


Wenliang WEI, Yangping WANG, Biao YUE, Anzheng WANG, Zhe ZHANG. Deep learning model for infrared and visible image fusion based on illumination weight allocation and attention[J]. Journal of Computer Applications, 2024, 44(7): 2183-2191.

Fig. 1 Deep learning model for infrared and visible image fusion based on illumination weight allocation and attention

| Model | EN | MI | VIF | SCD | SF | |
|---|---|---|---|---|---|---|
| CSF | 5.3706 | 2.1408 | 0.5482 | 1.2220 | 7.7608 | 0.2964 |
| GTF | 5.4052 | 1.4428 | 0.5244 | 0.8236 | 11.0756 | 0.4284 |
| IFCNN | 5.8132 | 2.2010 | 0.6666 | 1.3248 | 12.1948 | 0.5432 |
| MDLatLRR | 5.7812 | 2.3162 | 0.8582 | 1.3244 | 8.9320 | 0.5376 |
| DenseFuse | 5.2762 | 2.4808 | 0.6038 | 1.1394 | 7.0208 | 0.2980 |
| PIAFusion | 5.9356 | 3.6884 | 0.8550 | 1.6018 | 12.6828 | 0.6456 |
| FusionGAN | 5.4032 | 1.7668 | 0.4738 | 1.0074 | 4.8720 | 0.1404 |
| U2Fusion | 5.3476 | 2.4048 | 0.5848 | 1.2238 | 6.5416 | 0.2706 |
| IA-Fusion | 6.2842 | 3.9818 | 0.8876 | 1.7140 | 14.5898 | 0.6342 |

Tab. 1 Mean evaluation metrics for group “07D”
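EN and SF in Table 1 are no-reference metrics computed on the fused image alone. The sketch below implements their textbook definitions in NumPy, assuming 8-bit grayscale input. The source does not give its exact formulas, and published SF implementations differ in normalization (some divide by M·N rather than by the number of differences), so values from this sketch need not match the table exactly. The function names are illustrative.

```python
import numpy as np

def entropy(img: np.ndarray) -> float:
    """EN: Shannon entropy (bits) of an 8-bit grayscale histogram."""
    if img.dtype != np.uint8:
        raise TypeError("expected an 8-bit grayscale image")
    hist = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]  # 0 * log(0) is taken as 0
    return float(-(p * np.log2(p)).sum())

def spatial_frequency(img: np.ndarray) -> float:
    """SF = sqrt(RF^2 + CF^2) from horizontal and vertical first differences."""
    f = img.astype(np.float64)
    rf2 = np.mean(np.diff(f, axis=1) ** 2)  # row frequency squared
    cf2 = np.mean(np.diff(f, axis=0) ** 2)  # column frequency squared
    return float(np.sqrt(rf2 + cf2))

if __name__ == "__main__":
    checker = np.tile(np.array([[0, 255], [255, 0]], dtype=np.uint8), (4, 4))
    print(entropy(checker), spatial_frequency(checker))
```

Higher EN means the fused histogram carries more information; higher SF means stronger local gray-level variation, which is why sharper edges raise SF.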


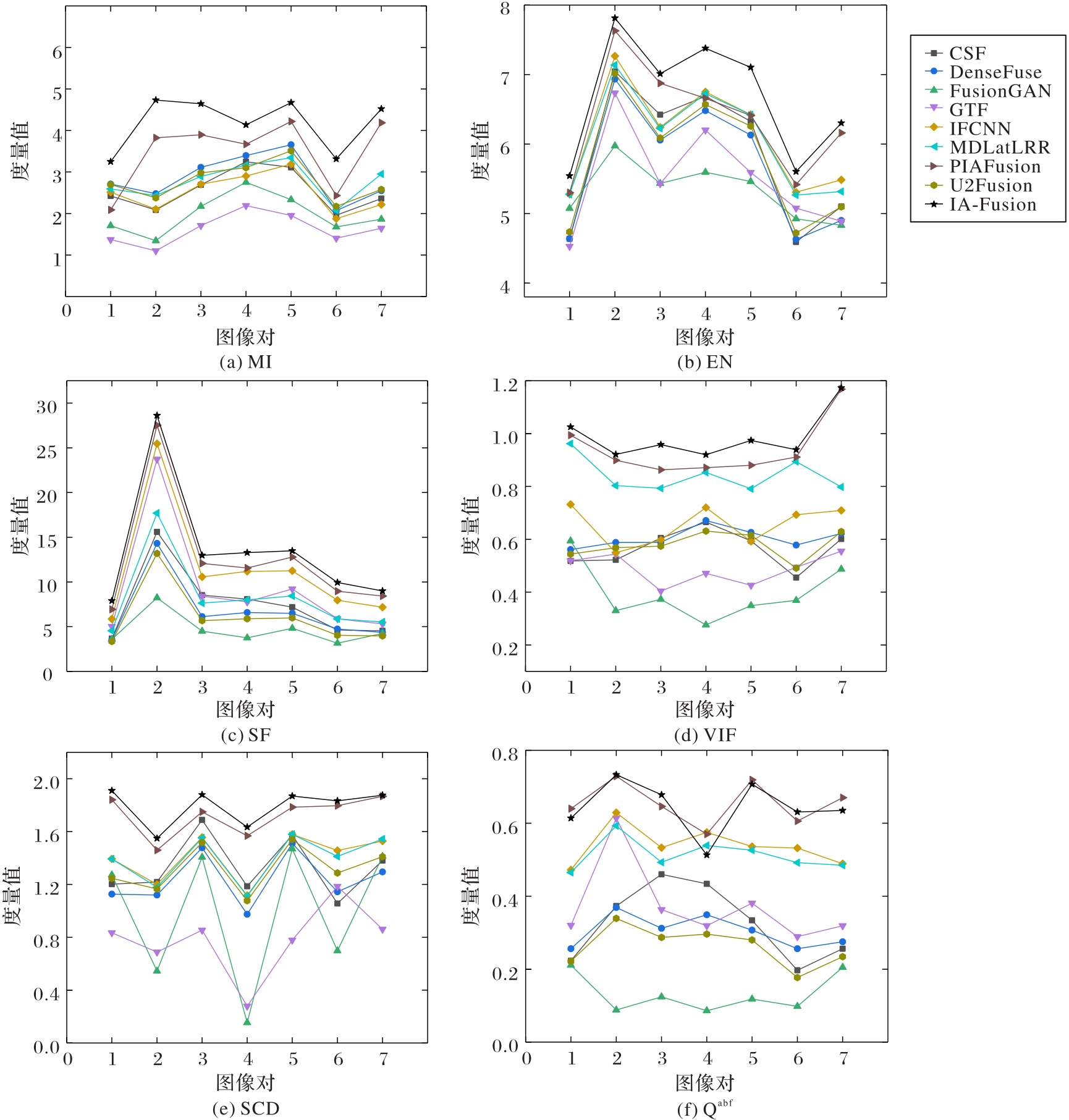

Fig. 12 Analysis of metrics for different models in complex scenarios

| Model | EN | MI | VIF | SCD | SF | |
|---|---|---|---|---|---|---|
| CSF | 5.6742 | 2.5381 | 0.5602 | 1.3102 | 7.0120 | 0.3122 |
| GTF | 5.4729 | 2.0429 | 0.5001 | 0.8031 | 9.2013 | 0.4102 |
| IFCNN | 5.9462 | 2.3886 | 0.6654 | 1.4123 | 10.9884 | 0.5329 |
| MDLatLRR | 5.9763 | 2.7024 | 0.8859 | 1.3624 | 8.0012 | 0.5102 |
| DenseFuse | 5.4996 | 3.0121 | 0.6123 | 1.2476 | 6.0138 | 0.3011 |
| PIAFusion | 6.2839 | 3.6235 | 0.9221 | 1.8213 | 11.1942 | 0.6942 |
| FusionGAN | 5.4012 | 2.2242 | 0.4321 | 1.0126 | 4.3613 | 0.1525 |
| U2Fusion | 5.5938 | 2.9469 | 0.5729 | 1.2911 | 5.6624 | 0.2714 |
| IA-Fusion | 6.5612 | 4.5745 | 0.9792 | 1.9129 | 14.2161 | 0.6346 |

Tab. 2 Analysis of metric means on overall MSRS test dataset
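MI and SCD compare the fused image with both source images. The sketch below uses common formulations that the source does not confirm: fusion MI as MI(IR, F) + MI(VIS, F), each computed from a 256-bin joint gray-level histogram in bits, and SCD (sum of the correlations of differences) as corr(F − VIS, IR) + corr(F − IR, VIS). All helper names are hypothetical, and the correlation is undefined for constant inputs.

```python
import numpy as np

def mutual_information(a, b, bins=256):
    """MI (bits) between two 8-bit images from their joint gray-level histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins,
                                 range=[[0, 256], [0, 256]])
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)  # marginal of a, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)  # marginal of b, shape (1, bins)
    nz = pxy > 0                         # skip empty cells (0 * log 0 = 0)
    return float((pxy[nz] * np.log2(pxy[nz] / (px * py)[nz])).sum())

def fusion_mi(ir, vis, fused):
    """Fusion MI: information the fused image shares with each source, summed."""
    return mutual_information(ir, fused) + mutual_information(vis, fused)

def _corr(x, y):
    """Pearson correlation of two arrays (undefined if either is constant)."""
    x = x - x.mean()
    y = y - y.mean()
    return float((x * y).sum() / np.sqrt((x * x).sum() * (y * y).sum()))

def scd(ir, vis, fused):
    """SCD: correlate each difference image (F minus one source) with the other source."""
    a, b, f = (np.asarray(t, dtype=np.float64) for t in (ir, vis, fused))
    return _corr(f - b, a) + _corr(f - a, b)
```

Both metrics reward a fused image that keeps what is specific to each modality; under these definitions SCD reaches its maximum of 2 when the fused image is exactly the sum of the two sources.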


| Module | SF | SCD |
|---|---|---|
| FS-A | 10.5214 | 1.5842 |
| CM-L1 | 12.5446 | 1.7513 |
| FS-A+IWA-Net | 12.7968 | 1.7802 |
| CM-L1+IWA-Net | 13.9869 | 1.9651 |

Tab. 3 Objective evaluation metrics for ablation experiments
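The ablation results are easier to compare as relative gains. The snippet below copies the four rows of Table 3 and computes percentage improvements over a chosen baseline row. Treating FS-A as the reference configuration is an assumption, since the table does not mark a baseline; `ABLATION`, `relative_gain`, and `gains_over` are illustrative names.

```python
# SF and SCD values copied from Table 3 (ablation experiments).
ABLATION: dict[str, tuple[float, float]] = {
    "FS-A": (10.5214, 1.5842),
    "CM-L1": (12.5446, 1.7513),
    "FS-A+IWA-Net": (12.7968, 1.7802),
    "CM-L1+IWA-Net": (13.9869, 1.9651),
}

def relative_gain(new: float, base: float) -> float:
    """Percentage improvement of `new` over `base`."""
    return (new - base) / base * 100.0

def gains_over(baseline: str) -> dict[str, tuple[float, float]]:
    """(SF gain %, SCD gain %) of every other configuration relative to `baseline`."""
    b_sf, b_scd = ABLATION[baseline]
    return {
        name: (relative_gain(sf, b_sf), relative_gain(scd, b_scd))
        for name, (sf, scd) in ABLATION.items()
        if name != baseline
    }

if __name__ == "__main__":
    for name, (g_sf, g_scd) in gains_over("FS-A").items():
        print(f"{name:>14}: SF {g_sf:+.2f}%  SCD {g_scd:+.2f}%")
```

Calling `gains_over("CM-L1")` isolates the illumination branch: adding IWA-Net to CM-L1 raises SF by about 11.5% and SCD by about 12.2%.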


| 1 | MA J, MA Y, LI C. Infrared and visible image fusion methods and applications: a survey [J]. Information Fusion, 2019, 45: 153-178. |

| 2 | P-H DINH. Combining Gabor energy with equilibrium optimizer algorithm for multi-modality medical image fusion [J]. Biomedical Signal Processing and Control, 2021, 68: 102696. |

| 3 | LI C, ZHU C, HUANG Y, et al. Cross-modal ranking with soft consistency and noisy labels for robust RGB-T tracking [C]// Proceedings of the 15th European Conference on Computer Vision. Cham: Springer, 2018: 831-847. |

| 4 | LU Y, WU Y, LIU B, et al. Cross-modality person re-identification with shared-specific feature transfer [C]// Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE, 2020: 13376-13386. |

| 5 | HA Q, WATANABE K, KARASAWA T, et al. MFNet: towards real-time semantic segmentation for autonomous vehicles with multi-spectral scenes [C]// Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems. Piscataway: IEEE, 2017: 5108-5115. |

| 6 | ZHU H R, LIU Y Q, ZHANG W Y. Infrared and visible image fusion based on contrast enhancement and multi-scale edge-preserving decomposition [J]. Journal of Electronics & Information Technology, 2018, 40(6): 1294-1300. |

| 7 | ZHANG Q, LIU Y, BLUM R S, et al. Sparse representation based multi-sensor image fusion for multi-focus and multi-modality images: A review [J]. Information Fusion, 2018, 40: 57-75. |

| 8 | LI S, KANG X, FANG L, et al. Pixel-level image fusion: a survey of the state of the art [J]. Information Fusion, 2017, 33: 100-112. |

| 9 | PRABHAKAR K R, SRIKAR V S, BABU R V. DeepFuse: a deep unsupervised approach for exposure fusion with extreme exposure image pairs [C]// Proceedings of the 2017 IEEE International Conference on Computer Vision. Piscataway: IEEE, 2017: 4724-4732. |

| 10 | CHEN Y, ZHANG J J, WANG Z. Infrared and visible image fusion based on multi-scale dense attention connection network [J]. Optics and Precision Engineering, 2022, 30(18): 2253-2266. |

| 11 | LI H, WU X-J, DURRANI T. NestFuse: an infrared and visible image fusion architecture based on nest connection and spatial/channel attention models [J]. IEEE Transactions on Instrumentation and Measurement, 2020, 69(12): 9645-9656. |

| 12 | LI H, WU X-J, KITTLER J. RFN-Nest: an end-to-end residual fusion network for infrared and visible images [J]. Information Fusion, 2021, 73: 72-86. |

| 13 | ZHANG H, XU H, XIAO Y, et al. Rethinking the image fusion: a fast unified image fusion network based on proportional maintenance of gradient and intensity [J]. Proceedings of the AAAI Conference on Artificial Intelligence, 2020, 34(7): 12797-12804. |

| 14 | MA J, YU W, LIANG P, et al. FusionGAN: A generative adversarial network for infrared and visible image fusion [J]. Information Fusion, 2019, 48: 11-26. |

| 15 | HOU R, ZHOU D, NIE R, et al. VIF-Net: an unsupervised framework for infrared and visible image fusion [J]. IEEE Transactions on Computational Imaging, 2020, 6: 640-651. |

| 16 | LI C, SONG D, TONG R, et al. Illumination-aware faster R-CNN for robust multispectral pedestrian detection [J]. Pattern Recognition, 2019, 85: 161-171. |

| 17 | GUO X, MENG L, MEI L, et al. Multi-focus image fusion with Siamese self-attention network [J]. IET Image Processing, 2020, 14(7): 1339-1346. |

| 18 | WANG Y, WANG J, GAO L, et al. An improved attention mechanism algorithm model and hardware acceleration design method [J/OL]. Acta Electronica Sinica: 1-9[2023-06-19]. |

| 19 | AN W-B, WANG H-M. Infrared and visible image fusion with supervised convolutional neural network [J]. Optik, 2020, 219: 165120. |

| 20 | XU H, ZHANG H, MA J. Classification saliency-based rule for visible and infrared image fusion [J]. IEEE Transactions on Computational Imaging, 2021, 7: 824-836. |

| 21 | MA J, CHEN C, LI C, et al. Infrared and visible image fusion via gradient transfer and total variation minimization [J]. Information Fusion, 2016, 31: 100-109. |

| 22 | TANG L, YUAN J, ZHANG H, et al. PIAFusion: a progressive infrared and visible image fusion network based on illumination aware [J]. Information Fusion, 2022, 83/84: 79-92. |

| 23 | ZHANG Y, LIU Y, SUN P, et al. IFCNN: a general image fusion framework based on convolutional neural network [J]. Information Fusion, 2020, 54: 99-118. |

| 24 | LI H, WU X-J, KITTLER J. MDLatLRR: a novel decomposition method for infrared and visible image fusion [J]. IEEE Transactions on Image Processing, 2020, 29: 4733-4746. |

| 25 | XU H, MA J, JIANG J, et al. U2Fusion: a unified unsupervised image fusion network [J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020, 44(1): 502-518. |
