Journal of Computer Applications, 2023, Vol. 43, Issue (12): 3647-3653. DOI: 10.11772/j.issn.1001-9081.2022121881

Special topic: Artificial intelligence



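About the author: ZHANG Yu, born in 1997, female, native of Shijiazhuang, Hebei, M. S. candidate. Her research interests include deep learning and differential privacy.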

Yu ZHANG, Ying CAI, Jianyang CUI, Meng ZHANG, Yanfang FAN


Received: 2022-12-26

Revised: 2023-03-19

Accepted: 2023-03-24

Online: 2023-04-12

Published: 2023-12-10

Corresponding author: Ying CAI

Abstract:

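To address the privacy leakage caused by model parameters memorizing part of the features of the training data during the training of Convolutional Neural Network (CNN) models, a Gradient Descent with Momentum algorithm based on Differential Privacy in CNN (DPGDM) was proposed. Firstly, Gaussian noise satisfying differential privacy was added to the gradients during backpropagation in model optimization, and the noisy gradients were used to update the model parameters, so that differential privacy protection was achieved for the model as a whole. Secondly, to reduce the impact of the differential privacy noise on the convergence speed of the model, a learning rate decay strategy was designed to improve the gradient descent with momentum algorithm. Finally, to reduce the impact of the noise on model accuracy, the noise scale was adjusted dynamically during model optimization, which changes the amount of noise added to the gradients in each iteration. Experimental results show that, compared with DP-SGD (Differentially Private Stochastic Gradient Descent), the proposed algorithm improves model accuracy by about 5 and 4 percentage points when the privacy budget is 0.3 and 0.5, respectively. These results indicate that the proposed algorithm improves model usability while providing privacy protection for the model.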
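The update described in the abstract (noisy gradients feeding a momentum update) can be sketched as a single training step. This is a minimal NumPy sketch, not the authors' implementation: it assumes per-example L2 clipping with a bound `clip_norm` as in DP-SGD [14], since the abstract does not say how gradient sensitivity is bounded, and it uses the common form v ← m·v + g, θ ← θ − η·v for momentum. The function name `dp_momentum_step` and its parameters are hypothetical; the learning rate and noise scale are passed in so that a caller can decay or adjust them per iteration.

```python
import numpy as np


def dp_momentum_step(params, per_example_grads, velocity, lr, sigma,
                     clip_norm=1.0, m=0.9, rng=None):
    """One differentially private momentum update (illustrative sketch).

    params            -- current parameters, shape (d,)
    per_example_grads -- one gradient row per example in the batch, shape (B, d)
    velocity          -- momentum buffer, shape (d,)
    lr                -- learning rate for this step (caller may decay it)
    sigma             -- noise scale for this step (caller may adjust it)
    clip_norm         -- ASSUMED per-example L2 clipping bound C
    m                 -- momentum hyperparameter
    """
    rng = np.random.default_rng() if rng is None else rng
    batch_size = per_example_grads.shape[0]

    # Clip every example's gradient to L2 norm <= C so that a single example
    # changes the summed gradient by at most C (bounded sensitivity).
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    factors = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_example_grads * factors

    # Gaussian mechanism: add N(0, (sigma * C)^2 I) to the summed gradient,
    # then average over the batch.
    noise = rng.normal(0.0, sigma * clip_norm, size=params.shape)
    noisy_grad = (clipped.sum(axis=0) + noise) / batch_size

    # Momentum update that only ever sees the noisy gradient.
    velocity = m * velocity + noisy_grad
    params = params - lr * velocity
    return params, velocity


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    theta, v = np.zeros(3), np.zeros(3)
    grads = rng.normal(size=(250, 3))  # stand-in for real per-example gradients
    theta, v = dp_momentum_step(theta, grads, v, lr=0.04, sigma=2.0, rng=rng)
    print(theta)
```

Because only the clipped, noised gradient reaches the momentum buffer and the parameters, the privacy guarantee of each step follows from the Gaussian mechanism; accounting for the total privacy budget across many steps is a separate computation not shown here.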



Yu ZHANG, Ying CAI, Jianyang CUI, Meng ZHANG, Yanfang FAN. Gradient descent with momentum algorithm based on differential privacy in convolutional neural network[J]. Journal of Computer Applications, 2023, 43(12): 3647-3653.

| Parameter | MNIST | Fashion-MNIST | CIFAR-10 |
|---|---|---|---|
| Batch size N | 250 | 256 | 1500 |
| Training epochs | 100 | 100 | 300 |
| Initial learning rate | 0.04 | 0.04 | 0.20 |
| Initial noise scale | 2 | 2 | 15 |
| Minimum noise scale | 0.18 | 0.16 | 0.10 |
| Momentum hyperparameter m | 0.9 | 0.9 | 0.9 |


Tab. 1 Experimental parameters
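Table 1 fixes the initial learning rate, the initial and minimum noise scales, and the momentum, but the decay rules themselves are not given here. The sketch below shows, under the assumption of simple per-epoch exponential decay with hypothetical factors `lr_decay` and `sigma_decay`, how the Table 1 values could parameterize a learning-rate schedule and a noise-scale schedule floored at the minimum noise scale. The class `DPSchedule` and both decay factors are illustrative placeholders, not values from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DPSchedule:
    lr0: float                 # initial learning rate (Table 1)
    sigma0: float              # initial noise scale (Table 1)
    sigma_min: float           # minimum noise scale (Table 1)
    momentum: float = 0.9      # momentum hyperparameter m (Table 1)
    lr_decay: float = 0.98     # HYPOTHETICAL per-epoch decay factor
    sigma_decay: float = 0.95  # HYPOTHETICAL per-epoch decay factor

    def lr(self, epoch: int) -> float:
        """Learning rate at a given epoch (assumed exponential decay)."""
        return self.lr0 * self.lr_decay ** epoch

    def sigma(self, epoch: int) -> float:
        """Noise scale at a given epoch, never below sigma_min."""
        return max(self.sigma_min, self.sigma0 * self.sigma_decay ** epoch)


# Initial and minimum values copied from Table 1; decay factors are placeholders.
TABLE1 = {
    "MNIST": DPSchedule(lr0=0.04, sigma0=2.0, sigma_min=0.18),
    "Fashion-MNIST": DPSchedule(lr0=0.04, sigma0=2.0, sigma_min=0.16),
    "CIFAR-10": DPSchedule(lr0=0.20, sigma0=15.0, sigma_min=0.10),
}

if __name__ == "__main__":
    s = TABLE1["MNIST"]
    for epoch in (0, 10, 50, 99):
        print(epoch, round(s.lr(epoch), 5), round(s.sigma(epoch), 4))
```

A smaller noise scale spends more privacy budget per step, so any real implementation of a shrinking noise scale needs a privacy accountant that tracks the per-step noise scale, for example one based on Rényi differential privacy [26].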


| Algorithm | Accuracy | Accuracy loss |
|---|---|---|
| NO-PRIVACY | 89.82 | 0.00 |
| DPGDM | 85.55 | 4.27 |
| DP-SGD | 78.80 | 11.02 |
| DP-PSO | 81.40 | 8.42 |

Tab. 2 Accuracy comparison of different algorithms on Fashion-MNIST dataset (%)
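Every row of the accuracy-loss column in Tables 2 and 3 equals the non-private (NO-PRIVACY) accuracy minus the algorithm's accuracy, in percentage points. The short check below recomputes the column for Table 2 from the accuracy values alone; the dictionary `TABLE2` and the helper `accuracy_loss` are illustrative names.

```python
# Accuracies (%) on Fashion-MNIST, copied from Table 2.
TABLE2 = {"NO-PRIVACY": 89.82, "DPGDM": 85.55, "DP-SGD": 78.80, "DP-PSO": 81.40}


def accuracy_loss(accuracies, baseline="NO-PRIVACY"):
    """Percentage points lost relative to the non-private baseline."""
    base = accuracies[baseline]
    return {name: round(base - acc, 2) for name, acc in accuracies.items()}


if __name__ == "__main__":
    print(accuracy_loss(TABLE2))
```

The same arithmetic applied to the CIFAR-10 accuracies gives 70.12 − 68.72 = 1.40 points for DPGDM, matching Table 3, and shows that on Fashion-MNIST DPGDM is 85.55 − 78.80 = 6.75 points above DP-SGD.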


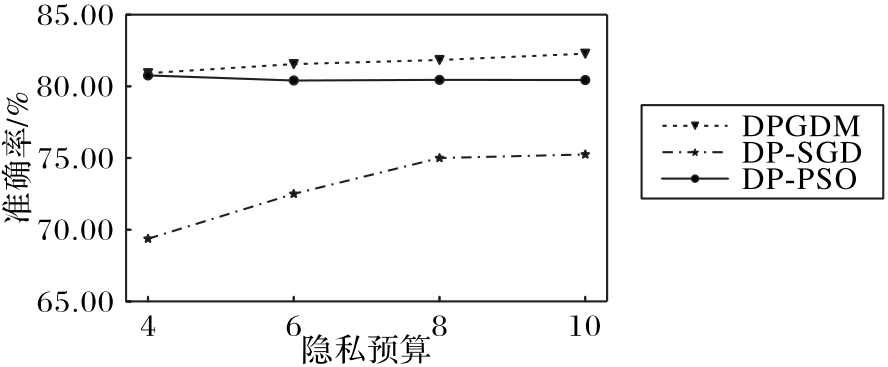

Fig. 5 Model accuracy comparison among different algorithms under different privacy budgets on Fashion-MNIST dataset

| Algorithm | Accuracy | Accuracy loss |
|---|---|---|
| NO-PRIVACY | 70.12 | 0.00 |
| DPGDM | 68.72 | 1.40 |
| DP-SGD | 64.59 | 5.53 |
| DP-SGD with Tempered Sigmoid | 66.20 | 3.92 |

Tab. 3 Accuracy comparison of different algorithms on CIFAR-10 dataset (%)


References:

[1] ALZUBAIDI L, ZHANG J, HUMAIDI A J, et al. Review of deep learning: concepts, CNN architectures, challenges, applications, future directions[J]. Journal of Big Data, 2021, 8: Article No. 53. DOI: 10.1186/s40537-021-00444-8

[2] SUN Y, XUE B, ZHANG M, et al. Automatically designing CNN architectures using the genetic algorithm for image classification[J]. IEEE Transactions on Cybernetics, 2020, 50(9): 3840-3854. DOI: 10.1109/tcyb.2020.2983860

[3] JI C Q, GAO Z Y, QIN J, et al. Review of image classification algorithms based on convolutional neural network[J]. Journal of Computer Applications, 2022, 42(4): 1044-1049. DOI: 10.11772/j.issn.1001-9081.2021071273

[4] HUSAIN S S, BOBER M. REMAP: multi-layer entropy-guided pooling of dense CNN features for image retrieval[J]. IEEE Transactions on Image Processing, 2019, 28(10): 5201-5213. DOI: 10.1109/tip.2019.2917234

[5] FREDRIKSON M, JHA S, RISTENPART T. Model inversion attacks that exploit confidence information and basic countermeasures[C]// Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security. New York: ACM, 2015: 1322-1333. DOI: 10.1145/2810103.2813677

[6] HERNANDEZ MARCANO N J, MOLLER M, HANSEN S, et al. On fully homomorphic encryption for privacy-preserving deep learning[C]// Proceedings of the 2019 IEEE Globecom Workshops. Piscataway: IEEE, 2019: 1-6. DOI: 10.1109/gcwkshps45667.2019.9024625

[7] TRAN A-T, LUONG T-D, KARNJANA J, et al. An efficient approach for privacy preserving decentralized deep learning models based on secure multi-party computation[J]. Neurocomputing, 2021, 422: 245-262. DOI: 10.1016/j.neucom.2020.10.014

[8] MEDEN B, EMERŠIČ Ž, ŠTRUC V, et al. k-Same-Net: k-anonymity with generative deep neural networks for face deidentification[J]. Entropy, 2018, 20(1): 60. DOI: 10.3390/e20010060

[9] DWORK C. Differential privacy[C]// Proceedings of the 33rd International Colloquium on Automata, Languages and Programming. Berlin: Springer, 2006: 1-12. DOI: 10.1007/11787006_1

[10] CAI Y, ZHANG Y, QU J, et al. Differential privacy preserving dynamic data release scheme based on Jensen-Shannon divergence[J]. China Communications, 2022, 19(6): 11-21. DOI: 10.23919/jcc.2022.06.002

[11] QU J J, CAI Y, FAN Y F, et al. Differentially private mixed data release algorithm based on k-prototype clustering[J]. Journal of Frontiers of Computer Science and Technology, 2021, 15(1): 109-118. DOI: 10.3778/j.issn.1673-9418.2003048

[12] ZHANG Y, CAI Y, ZHANG M, et al. A survey on privacy-preserving deep learning with differential privacy[C]// Proceedings of the 2021 International Conference on Big Data and Security. Singapore: Springer, 2022: 18-30. DOI: 10.1007/978-981-19-0852-1_2

[13] SHOKRI R, SHMATIKOV V. Privacy-preserving deep learning[C]// Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security. New York: ACM, 2015: 1310-1321. DOI: 10.1145/2810103.2813687

[14] ABADI M, CHU A, GOODFELLOW I, et al. Deep learning with differential privacy[C]// Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security. New York: ACM, 2016: 308-318. DOI: 10.1145/2976749.2978318

[15] YUAN D, ZHU X, WEI M, et al. Collaborative deep learning for medical image analysis with differential privacy[C]// Proceedings of the 2019 IEEE Global Communications Conference. Piscataway: IEEE, 2019: 1-6. DOI: 10.1109/globecom38437.2019.9014259

[16] ARACHCHIGE P C M, BERTOK P, KHALIL I, et al. Local differential privacy for deep learning[J]. IEEE Internet of Things Journal, 2019, 7(7): 5827-5842. DOI: 10.1109/jiot.2019.2952146

[17] GONG M, PAN K, XIE Y, et al. Preserving differential privacy in deep neural networks with relevance-based adaptive noise imposition[J]. Neural Networks, 2020, 125: 131-141. DOI: 10.1016/j.neunet.2020.02.001

[18] YU L, LIU L, PU C, et al. Differentially private model publishing for deep learning[C]// Proceedings of the 2019 IEEE Symposium on Security and Privacy. Piscataway: IEEE, 2019: 332-349. DOI: 10.1109/sp.2019.00019

[19] ZILLER A, USYNIN D, BRAREN R, et al. Medical imaging deep learning with differential privacy[J]. Scientific Reports, 2021, 11: Article No. 13524. DOI: 10.1038/s41598-021-93030-0

[20] PAPERNOT N, THAKURTA A, SONG S, et al. Tempered sigmoid activations for deep learning with differential privacy[J]. Proceedings of the AAAI Conference on Artificial Intelligence, 2021, 35(10): 9312-9321. DOI: 10.1609/aaai.v35i10.17123

[21] LI M, LI H J, CHEN J. Adam optimization algorithm based on differential privacy protection[J]. Computer Applications and Software, 2020, 37(6): 253-258, 296. DOI: 10.3969/j.issn.1000-386x.2020.06.044

[22] YU F C, FANG X J, ZHANG Y W, et al. Enhanced differential privacy defense mechanism in deep learning[J]. Journal of Nanjing University (Natural Science), 2021, 57(1): 10-20.

[23] YAMASHITA R, NISHIO M, DO R K G, et al. Convolutional neural networks: an overview and application in radiology[J]. Insights into Imaging, 2018, 9(4): 611-629. DOI: 10.1007/s13244-018-0639-9

[24] KATTENBORN T, LEITLOFF J, SCHIEFER F, et al. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2021, 173: 24-49. DOI: 10.1016/j.isprsjprs.2020.12.010

[25] KIRANYAZ S, AVCI O, ABDELJABER O, et al. 1D convolutional neural networks and applications: a survey[J]. Mechanical Systems and Signal Processing, 2021, 151: 107398. DOI: 10.1016/j.ymssp.2020.107398

[26] MIRONOV I. Rényi differential privacy[C]// Proceedings of the 2017 IEEE 30th Computer Security Foundations Symposium. Piscataway: IEEE, 2017: 263-275. DOI: 10.1109/csf.2017.11

[27] TAN Z W, ZHANG L F. Survey on privacy preserving techniques for machine learning[J]. Journal of Software, 2020, 31(7): 2127-2156. DOI: 10.13328/j.cnki.jos.006052

[28] YOUSEFPOUR A, SHILOV I, SABLAYROLLES A, et al. Opacus: user-friendly differential privacy library in PyTorch[EB/OL]. [2022-08-22].

[29] ZHANG P F, WU D H, DONG M G. Differential privacy deep learning model based on particle swarm optimization[J]. Computer Engineering, 2023, 49(9): 144-157. DOI: 10.19678/j.issn.1000-3428.0065590
