Journal of Computer Applications, 2022, Vol. 42, Issue (2): 333-342. DOI: 10.11772/j.issn.1001-9081.2021020232

• Artificial Intelligence •

邱鑫源1,2, 叶泽聪1,2, 崔翛龙2,3, 高志强2

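About the author: QIU Xinyuan, born in 1999, female, from Nanchang, Jiangxi, M.S. candidate. Her main research interests are federated learning and deep learning.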

Xinyuan QIU1,2, Zecong YE1,2, Xiaolong CUI2,3, Zhiqiang GAO2

Received: 2021-02-09

Revised: 2021-04-13

Accepted: 2021-04-20

Online: 2022-02-11

Published: 2022-02-10

Contact: Xiaolong CUI

Abstract:

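Federated learning emerged to resolve the otherwise irreconcilable conflict between the demand for data sharing and the requirements of privacy protection. As a form of distributed machine learning, federated learning requires the participants and the central server to continually exchange large numbers of model parameters, which incurs substantial communication overhead. At the same time, federated learning is increasingly deployed on mobile devices with limited communication bandwidth and limited battery power, and limited network bandwidth combined with a rapidly growing number of clients aggravates the communication bottleneck. To address this bottleneck, the basic workflow of federated learning is first analyzed. Then, from a methodological perspective, three mainstream categories of methods (reducing the model update frequency, model compression, and client selection) and special methods such as model partitioning are introduced in detail, and the specific optimization schemes are compared and analyzed in depth. Finally, the development trends of research on the communication overhead of federated learning are summarized and discussed.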
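The basic workflow mentioned in the abstract (the server broadcasts a global model, clients train locally, the server aggregates the uploads) can be illustrated with a minimal FedAvg-style sketch. Everything below is an illustrative assumption, not code from the surveyed papers: the two-parameter linear model, the helper names `local_update`, `fed_avg_round` and `train`, the learning rate, and the toy client data are all hypothetical. Raising `epochs` lets each client do more local work between uploads, which is the intuition behind reducing the model update frequency.

```python
from typing import List, Tuple

Weights = List[float]                # [w0, w1] of a toy model y = w0 + w1 * x
Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one client


def local_update(weights: Weights, data: Dataset, epochs: int, lr: float) -> Weights:
    """Run `epochs` full-batch gradient steps on one client's mean squared error."""
    w0, w1 = weights
    for _ in range(epochs):
        g0 = sum(2 * (w0 + w1 * x - y) for x, y in data) / len(data)
        g1 = sum(2 * (w0 + w1 * x - y) * x for x, y in data) / len(data)
        w0, w1 = w0 - lr * g0, w1 - lr * g1
    return [w0, w1]


def fed_avg_round(global_w: Weights, clients: List[Dataset], epochs: int, lr: float) -> Weights:
    """One communication round: broadcast, local training, size-weighted averaging."""
    total = sum(len(data) for data in clients)
    new_w = [0.0] * len(global_w)
    for data in clients:
        local_w = local_update(global_w, data, epochs, lr)  # download + local work
        for i, value in enumerate(local_w):                 # upload + aggregation
            new_w[i] += value * len(data) / total
    return new_w


def train(clients: List[Dataset], rounds: int, epochs: int, lr: float = 0.05) -> Weights:
    """Repeat communication rounds starting from a zero-initialised global model."""
    w = [0.0, 0.0]
    for _ in range(rounds):
        w = fed_avg_round(w, clients, epochs, lr)
    return w


# Hypothetical toy data: two clients whose samples all lie on y = 1 + 2x.
CLIENTS = [[(0.0, 1.0), (1.0, 3.0)], [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]]
```

Calling `train(CLIENTS, rounds=300, epochs=5)` should drive the global weights toward `[1, 2]` on this toy data; only the two model parameters cross the network in each round, never the raw samples.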

Cite this article: Xinyuan QIU, Zecong YE, Xiaolong CUI, Zhiqiang GAO. Survey of communication overhead of federated learning[J]. Journal of Computer Applications, 2022, 42(2): 333-342.

| Target accuracy/% | Communication rounds: SGD | Communication rounds: FedSGD | Communication rounds: FedAvg |
|---|---|---|---|
| 80 | 18 000 | 3 750 | 280 |
| 82 | 31 000 | 6 600 | 630 |
| 85 | 99 000 | — | 2 000 |

Tab. 1 Communication rounds required by different algorithms to reach the same target accuracy on the CIFAR-10 test set
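As a rough reading aid for Table 1, the following sketch computes how many times more communication rounds SGD and FedSGD need than FedAvg at each target accuracy. The numbers are copied from the table; the dictionary layout and the function name `reduction_vs_fedavg` are hypothetical choices made for this illustration.

```python
# Communication rounds from Table 1; None marks the entry missing for FedSGD at 85%.
ROUNDS = {
    80: {"SGD": 18000, "FedSGD": 3750, "FedAvg": 280},
    82: {"SGD": 31000, "FedSGD": 6600, "FedAvg": 630},
    85: {"SGD": 99000, "FedSGD": None, "FedAvg": 2000},
}


def reduction_vs_fedavg(target_accuracy: int) -> dict:
    """Return rounds(other algorithm) / rounds(FedAvg), rounded to one decimal."""
    row = ROUNDS[target_accuracy]
    return {
        name: round(rounds / row["FedAvg"], 1)
        for name, rounds in row.items()
        if name != "FedAvg" and rounds is not None
    }


for target in sorted(ROUNDS):
    print(target, reduction_vs_fedavg(target))
```

At the 80% target, for instance, the ratio works out to about 64.3 for SGD and 13.4 for FedSGD, so under these figures FedAvg needs roughly an order of magnitude fewer rounds than FedSGD.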

| Model | E | B | u | Communication rounds (IID) | Communication rounds (non-IID) |
|---|---|---|---|---|---|
| FedSGD | 1 | ∞ | 1.0 | 626 | 483 |
| FedAvg | 5 | ∞ | 5.0 | 179 | 1 000 |
| FedAvg | 1 | 50 | 12.0 | 65 | 600 |
| FedAvg | 20 | ∞ | 20.0 | 234 | 672 |
| FedAvg | 1 | 10 | 60.0 | 34 | 350 |
| FedAvg | 5 | 50 | 60.0 | 29 | 334 |
| FedAvg | 20 | 50 | 240.0 | 32 | 426 |
| FedAvg | 5 | 10 | 300.0 | 20 | 229 |
| FedAvg | 20 | 10 | 1 200.0 | 18 | 173 |

Tab. 2 Communication rounds required by FedSGD and FedAvg to reach 99% target accuracy on the MNIST test set[12]
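The u column of Table 2 can be reproduced from E and B. The sketch below assumes the setup of the cited paper [12] as we understand it: E is the number of local epochs, B is the local mini-batch size (∞ meaning the whole local dataset is one batch), u is the expected number of local updates per client per round, and each MNIST client holds 600 samples. The function name `updates_per_round` and its default argument are hypothetical.

```python
import math


def updates_per_round(E: int, B: float, samples_per_client: int = 600) -> float:
    """Expected local updates per round: u = E * n_k / B (u = E when B is infinite)."""
    if math.isinf(B):
        # Full-batch training: exactly one gradient step per local epoch.
        return float(E)
    return E * samples_per_client / B


# (E, B) pairs in the order of the rows of Table 2.
SETTINGS = [(1, math.inf), (5, math.inf), (1, 50), (20, math.inf), (1, 10),
            (5, 50), (20, 50), (5, 10), (20, 10)]

for E, B in SETTINGS:
    print(E, B, updates_per_round(E, B))
```

Under these assumptions the printed values match the u column (1, 5, 12, 20, 60, 60, 240, 300, 1 200), which makes the trade-off visible: more local computation per round generally goes with fewer communication rounds in the IID column.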

| Model | E | B | u | Communication rounds (IID) | Communication rounds (non-IID) |
|---|---|---|---|---|---|
| FedSGD | 1 | ∞ | 1.0 | 2 488 | 3 906 |
| FedAvg | 1 | 50 | 1.5 | 1 635 | 549 |
| FedAvg | 5 | ∞ | 5.0 | 613 | 597 |
| FedAvg | 1 | 10 | 7.4 | 460 | 164 |
| FedAvg | 5 | 50 | 7.4 | 401 | 152 |
| FedAvg | 5 | 10 | 37.1 | 192 | 41 |

Tab. 3 Communication rounds required by FedSGD and FedAvg to reach 54% target accuracy on the SHAKESPEARE test set[12]

| Model | Dataset | Number of parameters | FedAvg time per iteration/s | Overlap-FedAvg time per iteration/s |
|---|---|---|---|---|
| MLP | MNIST | 199 210 | 31.20 | 28.85 |
| MnistNet | FMNIST | 1 199 882 | 32.96 | 28.31 |
| MnistNet | EMNIST | 1 199 882 | 47.19 | 42.15 |
| CNNCifar | CIFAR-10 | 878 538 | 48.07 | 45.33 |
| VGGR | CIFAR-10 | 2 440 394 | 64.40 | 49.33 |
| ResNetR | CIFAR-10 | 11 169 162 | 156.88 | 115.31 |
| ResNetR | CIFAR-100 | 11 169 162 | 156.02 | 115.30 |
| Transformer | WIKITEXT-2 | 13 828 478 | 133.19 | 87.90 |

Tab. 4 Comparison of the average wall-clock time per iteration of Overlap-FedAvg and FedAvg[18]
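To make the per-iteration times in Table 4 easier to compare, this sketch converts each pair of timings into a relative saving. The timings are copied from the table; the names `TIMES`, `saving_percent` and `largest_saving` are hypothetical helpers introduced only for this illustration.

```python
# (model, dataset, FedAvg seconds, Overlap-FedAvg seconds) as listed in Table 4.
TIMES = [
    ("MLP", "MNIST", 31.20, 28.85),
    ("MnistNet", "FMNIST", 32.96, 28.31),
    ("MnistNet", "EMNIST", 47.19, 42.15),
    ("CNNCifar", "CIFAR-10", 48.07, 45.33),
    ("VGGR", "CIFAR-10", 64.40, 49.33),
    ("ResNetR", "CIFAR-10", 156.88, 115.31),
    ("ResNetR", "CIFAR-100", 156.02, 115.30),
    ("Transformer", "WIKITEXT-2", 133.19, 87.90),
]


def saving_percent(fedavg_s: float, overlap_s: float) -> float:
    """Relative reduction in time per iteration, in percent, rounded to one decimal."""
    return round(100 * (fedavg_s - overlap_s) / fedavg_s, 1)


def largest_saving() -> tuple:
    """Return (model, dataset, saving in percent) for the row with the largest saving."""
    rows = [(m, d, saving_percent(a, b)) for m, d, a, b in TIMES]
    return max(rows, key=lambda row: row[2])


for model, dataset, fedavg_s, overlap_s in TIMES:
    print(model, dataset, saving_percent(fedavg_s, overlap_s))
```

On these figures the saving ranges from a few percent for the small MLP to roughly a third for the Transformer, which is consistent with larger models having more communication time to overlap with computation.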

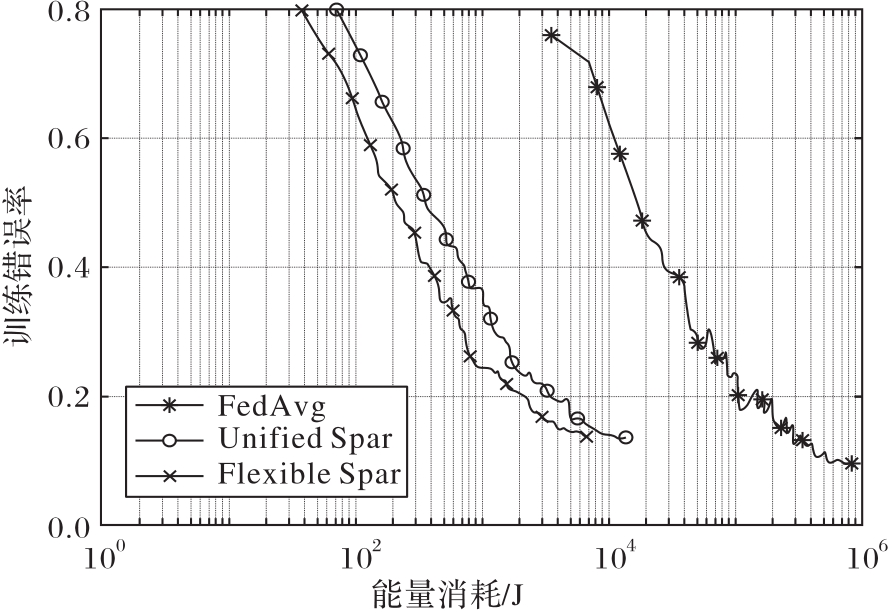

Fig. 6 Energy consumption comparison of Flexible Spar, Unified Spar and FedAvg under the same target accuracy[11]

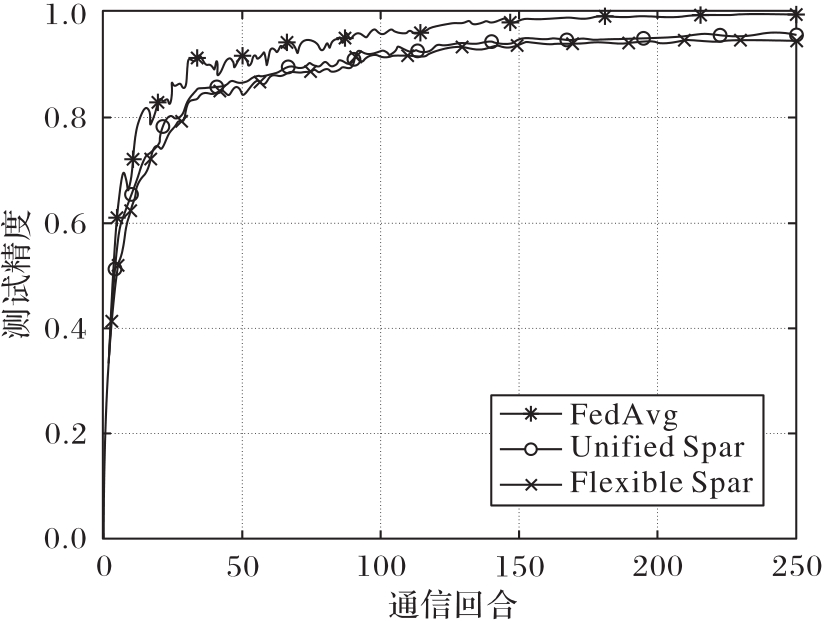

Fig. 7 Comparison of the number of communications required by Flexible Spar, Unified Spar and FedAvg under the same target accuracy[11]

Fig. 8 Comparison of Flexible Spar and other algorithms on energy consumption, accuracy and number of communications[22]

Fig. 9 Comparison of accuracy and energy consumption of FWQ and other algorithms on the CIFAR-100 and CIFAR-10 test sets[27]

| Algorithm | IID | non-IID | dispatch |
|---|---|---|---|
| FedAvg | 83.75 | 83.41 | 79.94 |
| HDAFL | 83.70 | 80.21 | 44.20 |
| DH | 85.44 | 85.12 | 74.95 |
| DH+GS | 84.30 | 84.44 | 85.62 |

Tab. 5 Comparison of model accuracy (%) of DH+GS and other algorithms on the TTC dataset[46]

References:

[1] YANG Q, LIU Y, CHEN T, et al. Federated machine learning: concept and applications[J]. ACM Transactions on Intelligent Systems and Technology, 2019, 10(2): No.12. DOI: 10.1145/3298981

[2] KAIROUZ P, MCMAHAN H B, AVENT B, et al. Advances and open problems in federated learning[EB/OL]. (2021-03-09) [2021-03-26]. DOI: 10.1561/2200000083

[3] LI T, SAHU A K, TALWALKAR A, et al. Federated learning: challenges, methods, and future directions[J]. IEEE Signal Processing Magazine, 2020, 37(3): 50-60. DOI: 10.1109/msp.2020.2975749

[4] WANG J Z, KONG L W, HUANG Z C, et al. Research advances on privacy protection of federated learning[J]. Big Data Research, 2021, 7(3): 130-149. DOI: 10.1145/3469968.3469985

[5] CHEN B, CHENG X, ZHANG J L, et al. Survey of security and privacy in federated learning[J]. Journal of Nanjing University of Aeronautics and Astronautics, 2020, 52(5): 675-684. DOI: 10.16356/j.1005

[6] ZHOU J, FANG G Y, WU N. Survey on security and privacy-preserving in federated learning[J]. Journal of Xihua University (Natural Science Edition), 2020, 39(4): 9-17. DOI: 10.12198/j.issn.1673-159X.3607

[7] LI L, FAN Y X, TSE M, et al. A review of applications in federated learning[J]. Computers and Industrial Engineering, 2020, 149: No.106854. DOI: 10.1016/j.cie.2020.106854

[8] LIU G, ZHAO L J, CHEN Q Y, et al. Summary of principles and applications of federated learning in 5G cloud-edge collaboration scenarios[J]. Telecom World, 2020, 27(7): 50-52. DOI: 10.3969/j.issn.1006-4222.2020.07.026

[9] WANG Y S. A survey on federated learning for data sharing and exchange[J]. Unmanned Systems Technology, 2019, 2(6): 58-62. DOI: 10.1109/icicas48597.2019.00162

[10] WANG J Z, KONG L W, HUANG Z C, et al. Research review of federated learning algorithms[J]. Big Data Research, 2020, 6(6): 64-82.

[11] SHI D, LI L, CHEN R, et al. Towards energy efficient federated learning over 5G+ mobile devices[EB/OL]. (2021-01-13) [2021-01-26]. DOI: 10.1155/2021/2537546

[12] MCMAHAN B, MOORE E, RAMAGE D, et al. Communication-efficient learning of deep networks from decentralized data[C]// Proceedings of the 20th International Conference on Artificial Intelligence and Statistics. New York: JMLR.org, 2017: 1273-1282.

[13] ALISTARH D, GRUBIC D, LI J Z, et al. QSGD: communication-efficient SGD via gradient quantization and encoding[C]// Proceedings of the 31st International Conference on Neural Information Processing Systems. Red Hook, NY: Curran Associates Inc., 2017: 1707-1718.

[14] KONEČNÝ J. Stochastic, distributed and federated optimization for machine learning[D/OL]. (2017-07-04) [2021-01-26].

[15] KONEČNÝ J, MCMAHAN H B, YU F X, et al. Federated learning: strategies for improving communication efficiency[EB/OL]. (2017-10-30) [2021-01-26].

[16] LI T, SAHU A K, ZAHEER M, et al. Federated optimization for heterogeneous networks[EB/OL]. [2021-01-26].

[17] SHI W Q, ZHOU S, NIU Z S, et al. Joint device scheduling and resource allocation for latency constrained wireless federated learning[J]. IEEE Transactions on Wireless Communications, 2021, 20(1): 453-467. DOI: 10.1109/twc.2020.3025446

[18] ZHOU Y H, YE Q, LV J C. Communication-efficient federated learning with compensated Overlap-FedAvg[J]. IEEE Transactions on Parallel and Distributed Systems, 2022, 33(1): 192-205. DOI: 10.1109/tpds.2021.3090331

[19] KONEČNÝ J, MCMAHAN H B, RAMAGE D, et al. Federated optimization: distributed machine learning for on-device intelligence[EB/OL]. (2016-10-08) [2021-01-26].

[20] DINH C T, TRAN N H, NGUYEN M N H, et al. Federated learning over wireless networks: convergence analysis and resource allocation[J]. IEEE/ACM Transactions on Networking, 2021, 29(1): 398-409. DOI: 10.1109/tnet.2020.3035770

[21] SATTLER F, WIEDEMANN S, MÜLLER K R, et al. Robust and communication-efficient federated learning from non-IID data[J]. IEEE Transactions on Neural Networks and Learning Systems, 2020, 31(9): 3400-3413. DOI: 10.1109/tnnls.2019.2944481

[22] LI L, SHI D, HOU R H, et al. To talk or to work: flexible communication compression for energy efficient federated learning over heterogeneous mobile edge devices[EB/OL]. (2020-12-22) [2021-01-26]. DOI: 10.1109/infocom42981.2021.9488839

[23] HE Y, ZENK M, FRITZ M. CosSGD: nonlinear quantization for communication-efficient federated learning[EB/OL]. (2020-12-15) [2021-01-26].

[24] KARIMIREDDY S P, REBJOCK Q, STICH S, et al. Error feedback fixes signSGD and other gradient compression schemes[C]// Proceedings of the 36th International Conference on Machine Learning. New York: JMLR.org, 2019: 3252-3261.

[25] LIN Y J, HAN S, MAO H Z, et al. Deep gradient compression: reducing the communication bandwidth for distributed training[EB/OL]. (2020-06-23) [2021-01-26].

[26] BERNSTEIN J, WANG Y X, AZIZZADENESHELI K, et al. signSGD: compressed optimisation for non-convex problems[C]// Proceedings of the 35th International Conference on Machine Learning. New York: JMLR.org, 2018: 560-569.

[27] CHEN R, LI L, XUE K P, et al. To talk or to work: energy efficient federated learning over mobile devices via the weight quantization and 5G transmission co-design[EB/OL]. (2020-12-21) [2021-01-26].

[28] CHANG W T, TANDON R. Communication efficient federated learning over multiple access channels[EB/OL]. (2020-01-23) [2021-01-26].

[29] AJI A F, HEAFIELD K. Sparse communication for distributed gradient descent[C]// Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing. Stroudsburg, PA: Association for Computational Linguistics, 2017: 440-445. DOI: 10.18653/v1/d17-1045

[30] WANGNI J Q, WANG J L, LIU J, et al. Gradient sparsification for communication-efficient distributed optimization[C]// Proceedings of the 32nd International Conference on Neural Information Processing Systems. Red Hook, NY: Curran Associates Inc., 2018: 1306-1316. DOI: 10.1145/3301326.3301347

[31] WU H D, WANG P. Fast-convergent federated learning with adaptive weighting[J]. IEEE Transactions on Cognitive Communications and Networking, 2021, 7(4): 1078-1088. DOI: 10.1109/tccn.2021.3084406

[32] HINTON G, VINYALS O, DEAN J. Distilling the knowledge in a neural network[EB/OL]. (2015-03-09) [2021-01-26].

[33] JEONG E, OH S, KIM H, et al. Communication-efficient on-device machine learning: federated distillation and augmentation under non-IID private data[EB/OL]. (2018-11-28) [2021-01-26]. DOI: 10.1109/mis.2020.3028613

[34] RAHBAR A, PANAHI A, BHATTACHARYYA C, et al. On the unreasonable effectiveness of knowledge distillation: analysis in the kernel regime - long version[EB/OL]. (2020-09-25) [2021-01-26].

[35] PHUONG M, LAMPERT C. Towards understanding knowledge distillation[C]// Proceedings of the 36th International Conference on Machine Learning. New York: JMLR.org, 2019: 5142-5151. DOI: 10.1109/iccv.2019.00144

[36] SATTLER F, MARBAN A, RISCHKE R, et al. Communication-efficient federated distillation[EB/OL]. (2020-12-01) [2021-01-26]. DOI: 10.1109/tnse.2021.3081748

[37] YANG K, JIANG T, SHI Y M, et al. Federated learning based on over-the-air computation[C]// Proceedings of the 2019 IEEE International Conference on Communications. Piscataway: IEEE, 2019: 1-6. DOI: 10.1109/icc.2019.8761429

[38] NISHIO T, YONETANI R. Client selection for federated learning with heterogeneous resources in mobile edge[C]// Proceedings of the 2019 IEEE International Conference on Communications. Piscataway: IEEE, 2019: 1-7. DOI: 10.1109/icc.2019.8761315

[39] YOSHIDA N, NISHIO T, MORIKURA M, et al. Hybrid-FL for wireless networks: cooperative learning mechanism using non-IID data[C]// Proceedings of the 2020 IEEE International Conference on Communications. Piscataway: IEEE, 2020: 1-7. DOI: 10.1109/icc40277.2020.9149323

[40] HUANG T S, LIN W W, LI K Q, et al. Stochastic client selection for federated learning with volatile clients[EB/OL]. (2020-11-17) [2021-01-26]. DOI: 10.1109/tpds.2020.3040887

[41] XIA S H, ZHU J Y, YANG Y H, et al. Fast convergence algorithm for analog federated learning[C]// Proceedings of the 2021 IEEE Conference on Computer Communications. Piscataway: IEEE, 2021: 1-6. DOI: 10.1109/icc42927.2021.9500875

[42] TANG S H, ZHANG C, OBANA S. Multi-slot over-the-air computation in fading channels[EB/OL]. (2020-10-23) [2021-01-26]. DOI: 10.1109/access.2021.3070901

[43] HU C, JIANG J, WANG Z. Decentralized federated learning: a segmented gossip approach[EB/OL]. [2021-01-26]. DOI: 10.3390/electronics9030440

[44] BOUACIDA N, HOU J H, ZANG H, et al. Adaptive federated dropout: improving communication efficiency and generalization for federated learning[C]// Proceedings of the 2021 IEEE Conference on Computer Communications Workshops. Piscataway: IEEE, 2021: 1-6. DOI: 10.1109/infocomwkshps51825.2021.9484526

[45] CALDAS S, KONEČNÝ J, MCMAHAN H B, et al. Expanding the reach of federated learning by reducing client resource requirements[EB/OL]. (2019-01-08) [2021-01-26].

[46] LI D W, CHANG Q L, PANG L X, et al. More industry-friendly: federated learning with high efficient design[EB/OL]. (2020-12-16) [2021-01-26].

[47] TRAN N H, BAO W, ZOMAYA A, et al. Federated learning over wireless networks: optimization model design and analysis[C]// Proceedings of the 2019 IEEE Conference on Computer Communications. Piscataway: IEEE, 2019: 1387-1395. DOI: 10.1109/infocom.2019.8737464

[48] AMIRI M M, GÜNDÜZ D. Federated learning over wireless fading channels[J]. IEEE Transactions on Wireless Communications, 2020, 19(5): 3546-3557. DOI: 10.1109/twc.2020.2974748

