Journal of Computer Applications ›› 2025, Vol. 45 ›› Issue (11): 3510-3518. DOI: 10.11772/j.issn.1001-9081.2024121834

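Received: 2024-12-30

Revised: 2025-01-17

Accepted: 2025-01-24

Online: 2025-02-14

Published: 2025-11-10

Corresponding author: Jian YUN

About the author: GAO Xinru, born in 2001 in Dalian, Liaoning, female, M.S. candidate. Her main research interest is federated learning.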

Jian YUN, Xinru GAO, Tao LIU, Wenjie BI


Abstract:

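Implementing privacy-preserving mechanisms in federated learning increases the system's communication burden, while attempts to improve communication efficiency tend to sacrifice model accuracy. To address this trade-off, a federated learning scheme balancing efficiency and security, FedPSR (Federated Parameter Sparsification with secure aggregation and Reconstruction), was designed. The scheme aims to balance communication efficiency, measured by time complexity and communication overhead, against privacy and security. First, the parameter sparsification strategy of the Sparse Ternary Compression (STC) algorithm compresses the model parameters to be uploaded into ternary form, reducing the amount of data transmitted. Second, to compensate for the information lost through compression, an error feedback mechanism adds the compression error of the previous round to the gradient obtained after the next round of local updates. Finally, Paillier homomorphic encryption protects the privacy of parameter transmission and aggregation while communication remains efficient. FedPSR was compared with current state-of-the-art schemes on several public datasets under independent and identically distributed (IID) and non-independent and identically distributed (Non-IID) data settings. The experimental results show that FedPSR resolves the inability of existing schemes to balance time complexity, communication overhead, and privacy protection, and that it improves model accuracy, convergence, and robustness under both IID and Non-IID conditions on three mainstream datasets.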
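The compression and error-feedback steps described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the `sparsity` parameter, and the use of the mean top-k magnitude as the shared ternary value are assumptions that follow the usual description of STC, and the model update is treated as a flat list of floats.

```python
from typing import List, Tuple


def _sign(x: float) -> float:
    """Return -1.0, 0.0 or 1.0 according to the sign of x."""
    return (x > 0) - (x < 0)


def stc_compress(values: List[float], sparsity: float) -> List[float]:
    """Sparse ternary compression of a flat vector (illustrative).

    Keeps the k = max(1, int(sparsity * n)) entries of largest magnitude and
    replaces each by sign * mu, where mu is the mean magnitude of the kept
    entries. All other entries become 0, so the output only takes values
    in {-mu, 0, +mu}.
    """
    n = len(values)
    k = max(1, int(sparsity * n))
    # Indices of the k entries with the largest absolute value.
    top = sorted(range(n), key=lambda i: abs(values[i]), reverse=True)[:k]
    mu = sum(abs(values[i]) for i in top) / k
    out = [0.0] * n
    for i in top:
        out[i] = _sign(values[i]) * mu
    return out


def compress_with_error_feedback(
    update: List[float], residual: List[float], sparsity: float
) -> Tuple[List[float], List[float]]:
    """Add the previous round's compression error to the fresh local update,
    compress the corrected update, and return the new residual."""
    corrected = [u + r for u, r in zip(update, residual)]
    compressed = stc_compress(corrected, sparsity)
    new_residual = [c - q for c, q in zip(corrected, compressed)]
    return compressed, new_residual


if __name__ == "__main__":
    # Two hypothetical rounds of local updates for an 8-parameter model.
    rounds = [
        [0.5, -0.1, 0.05, -0.7, 0.2, 0.0, 0.1, -0.3],
        [0.1, -0.2, 0.05, 0.1, 0.3, 0.0, 0.2, -0.4],
    ]
    residual = [0.0] * 8
    for update in rounds:
        sent, residual = compress_with_error_feedback(update, residual, 0.25)
        print("sent:", sent)
        print("residual:", residual)
```

Because the residual is carried forward instead of discarded, entries that were too small to be sent in one round accumulate until they are large enough to be selected in a later round.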


Jian YUN, Xinru GAO, Tao LIU, Wenjie BI. New federated learning scheme balancing efficiency and security[J]. Journal of Computer Applications, 2025, 45(11): 3510-3518.

| Data size/B | LWE encryption time/s | LWE decryption time/s | BFV encryption time/s | BFV decryption time/s | Paillier encryption time/s | Paillier decryption time/s |
|---|---|---|---|---|---|---|
| 2 000 | 592.46 | 4.98 | 108.11 | 53.81 | 64.35 | 18.34 |
| 20 000 | 6 131.32 | 51.98 | 1 101.31 | 549.68 | 612.34 | 177.21 |
| 40 000 | - | - | 2 059.33 | 1 083.56 | 1 198.54 | 694.66 |

Tab. 1 Comparison of encryption and decryption times for different encryption algorithms under the same computing power
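Tab. 1 includes Paillier, the scheme the abstract names for protecting parameter transmission and aggregation. The property that makes it usable for aggregation is additive homomorphism: multiplying two ciphertexts yields an encryption of the sum of their plaintexts, so a server can aggregate updates without decrypting them. The following toy sketch illustrates this under loud assumptions: the primes are tiny and insecure, the fixed-point `SCALE` and all function names are hypothetical, Python 3.8 or later is assumed for `pow(x, -1, n)`, and none of it reflects the authors' implementation or the timings in the table.

```python
import math
import secrets

SCALE = 1000  # hypothetical fixed-point scale for encoding floats


def paillier_keygen(p: int, q: int):
    """Build a Paillier key pair from two distinct primes (generator g = n + 1)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse, valid because gcd(lam, n) == 1
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    """Encrypt integer m (taken mod n) under public key n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random r in [1, n - 1]
        if math.gcd(r, n) == 1:
            break
    # With g = n + 1, g^m mod n^2 equals 1 + m * n.
    return ((1 + (m % n) * n) * pow(r, n, n2)) % n2


def decrypt(n: int, priv, c: int) -> int:
    """Recover the plaintext (mod n) from ciphertext c."""
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Homomorphic addition: the product of ciphertexts encrypts the sum."""
    return (c1 * c2) % (n * n)


def encode(n: int, x: float) -> int:
    """Map a float to a residue mod n; negatives wrap around."""
    return round(x * SCALE) % n


def decode(n: int, v: int) -> float:
    """Inverse of encode; residues above n // 2 are read as negative."""
    if v > n // 2:
        v -= n
    return v / SCALE


if __name__ == "__main__":
    n, priv = paillier_keygen(1009, 1013)  # toy primes, NOT secure
    # Three hypothetical clients each upload one encrypted ternary value.
    client_values = [0.6, -0.6, 0.25]
    ciphertexts = [encrypt(n, encode(n, v)) for v in client_values]
    aggregate = ciphertexts[0]
    for c in ciphertexts[1:]:
        aggregate = add_encrypted(n, aggregate, c)
    print("decrypted sum:", decode(n, decrypt(n, priv, aggregate)))
```

A real deployment would use a vetted cryptographic library with keys of at least 2048 bits; the sketch only shows why sums can be computed on ciphertexts.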


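| Symbol | Value | Definition |
|---|---|---|
| | 100.00 | Number of client devices |
| | 0.10 | Fraction of participating clients |
| | 10.00 | Local training batch size |
| | 10.00 | Number of local training epochs |
| | 0.01 | Learning rate |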

Tab. 2 Parameter settings of federated learning in experiments


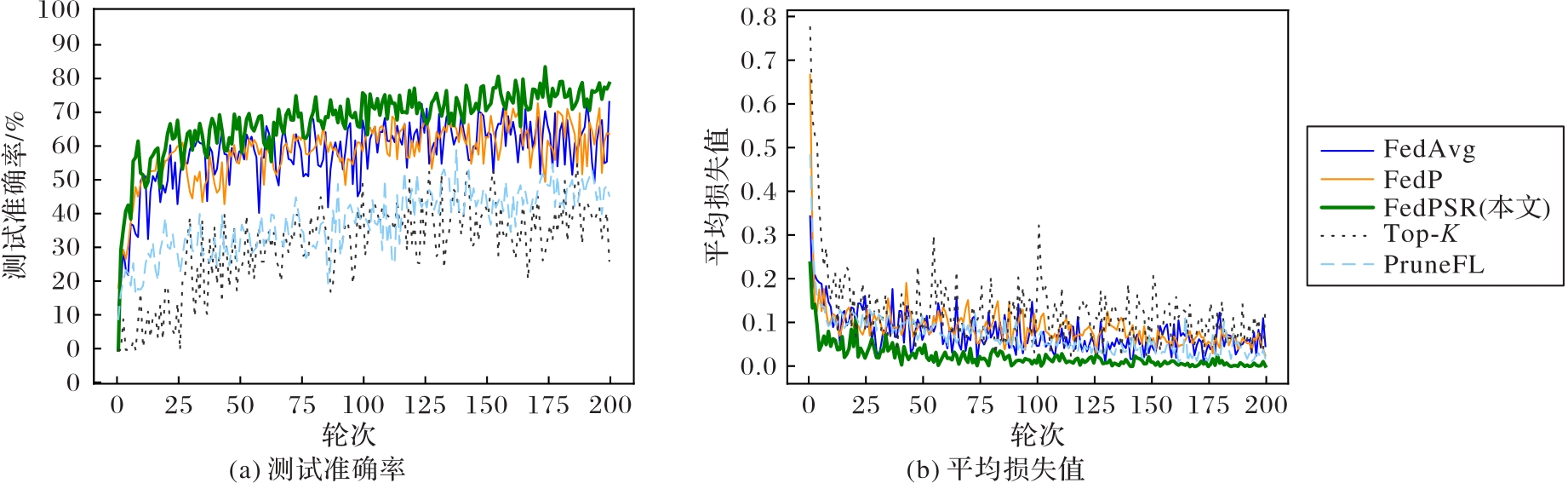

Fig. 10 Performance comparison of FedPSR and baseline schemes on Fashion-MNIST dataset under Non-IID condition

| Scheme | Communication time/s | Uploaded model size/MB |
|---|---|---|
| FedAvg | 29.843 | 0.083 319 |
| FedPSR | 33.877 | 0.000 365 |
| Top-K | 33.412 | 0.000 322 |
| PruneFL | 35.029 | 0.000 358 |

Tab. 3 Communication time overhead and model size in one training iteration
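The figures in Tab. 3 are easier to compare when expressed relative to FedAvg. The short calculation below does this using only the values printed in the table. The dictionary layout and the helper name `relative_to_fedavg` are illustrative assumptions. For FedPSR the upload is roughly 228 times smaller than for FedAvg, while the communication time is about 13.5% higher.

```python
# Values copied from Tab. 3: (communication time in s, uploaded model size in MB).
TABLE3 = {
    "FedAvg": (29.843, 0.083319),
    "FedPSR": (33.877, 0.000365),
    "Top-K": (33.412, 0.000322),
    "PruneFL": (35.029, 0.000358),
}


def relative_to_fedavg(scheme: str):
    """Return (size reduction factor, relative time overhead) versus FedAvg."""
    base_time, base_size = TABLE3["FedAvg"]
    time_s, size_mb = TABLE3[scheme]
    reduction = base_size / size_mb
    overhead = (time_s - base_time) / base_time
    return reduction, overhead


if __name__ == "__main__":
    for name in ("FedPSR", "Top-K", "PruneFL"):
        reduction, overhead = relative_to_fedavg(name)
        print(f"{name}: upload {reduction:.1f}x smaller, "
              f"communication time {overhead:+.1%}")
```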


