Journal of Computer Applications, 2025, Vol. 45, Issue (12): 4045-4054. DOI: 10.11772/j.issn.1001-9081.2024111669

Sihao WANG, Duzhen ZHANG, Changchang YANG

Received: 2024-11-26

Revised: 2025-02-15

Accepted: 2025-02-21

Online: 2025-03-04

Published: 2025-12-10

Contact: Duzhen ZHANG

About author: WANG Sihao, born in 2002 in Yancheng, Jiangsu, M. S. candidate, CCF member. His research interests include deep learning and medical image processing.

Abstract:

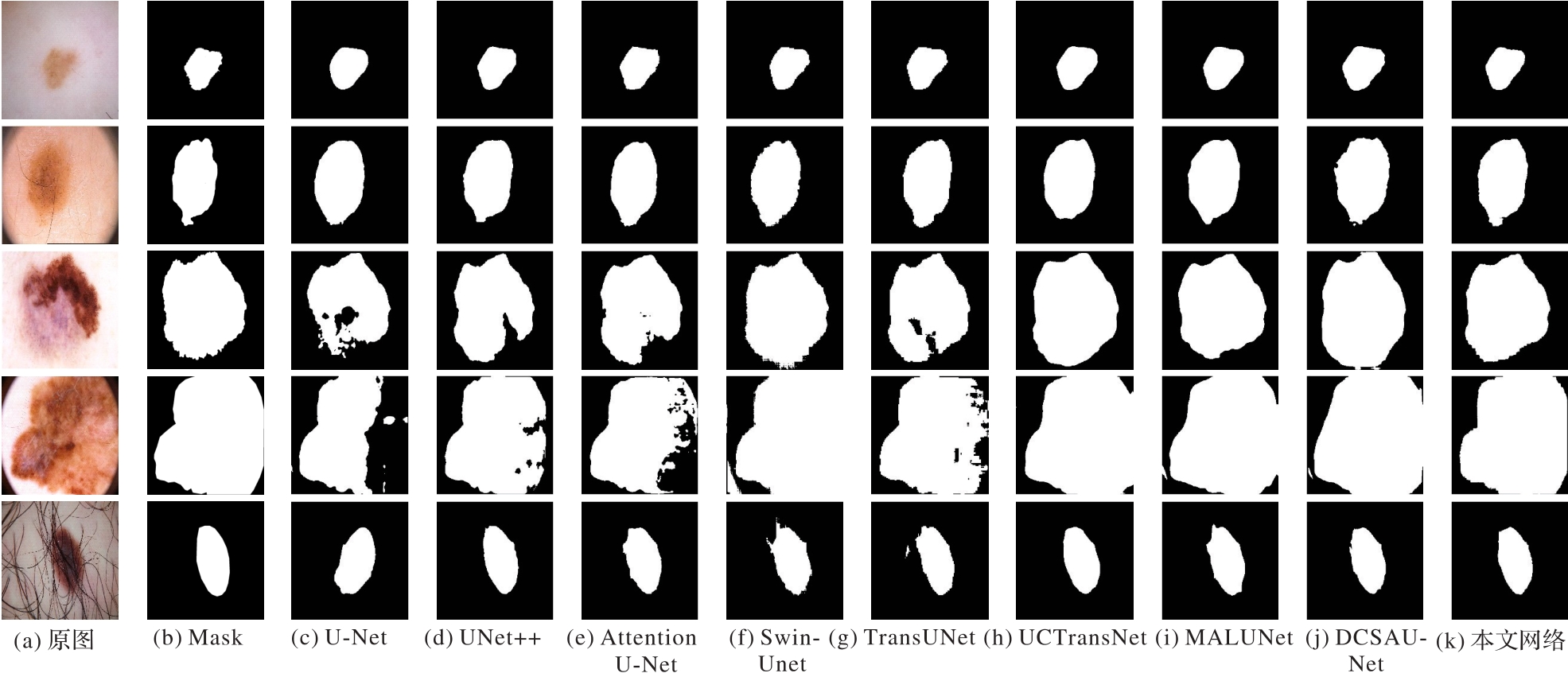

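To address blurred lesion boundaries, hair interference, and varying lesion sizes in skin lesion images, a skin lesion segmentation network based on a dual-path attention mechanism and multi-scale information fusion was proposed. Firstly, a residual gated attention module based on depthwise separable convolution, DGConv (Depthwise Gate Convolution), was designed in the encoder to capture local information of the lesion region. Secondly, a multi-scale context relation extraction module (MCEM) was designed at the network bottleneck; it models contextual information with horizontal and vertical average pooling and fuses the multi-scale features captured by a residual atrous convolution pyramid module, further strengthening the understanding of global lesion information. Thirdly, a dual-path attention module was designed at the skip connections to refine lesion information, and a multi-scale feature fusion enhancement (MSFE) module was used to blend information across stages, enriching the detail features transmitted at the current stage. Finally, a feature fusion module (FM) was designed in the decoder to resolve the receptive field mismatch within the same stage, and the encoder output was progressively fused with the features passed through the skip connections to obtain the final segmentation result. Experimental results on the ISIC2017 (International Skin Imaging Collaboration) and ISIC2018 datasets show that, compared with the second-best network for skin lesion segmentation, the proposed network improves Dice by 0.09 and 1.09 percentage points and Intersection over Union (IoU) by 0.14 and 1.76 percentage points, respectively; compared with the classic U-Net, it improves Dice by 5.13 and 3.84 percentage points and IoU by 7.74 and 6.04 percentage points, respectively. These results demonstrate the effectiveness of the proposed network.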
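The horizontal and vertical average pooling mentioned for MCEM can be illustrated with a minimal single-channel sketch in plain Python. This is not the authors' implementation: the function names, the toy feature map, and the choice of summation to combine the two strip summaries are all assumptions made for illustration.

```python
from typing import List

Matrix = List[List[float]]


def horizontal_avg_pool(feature: Matrix) -> List[float]:
    """Average each row over its width: an H x W map becomes H values."""
    return [sum(row) / len(row) for row in feature]


def vertical_avg_pool(feature: Matrix) -> List[float]:
    """Average each column over its height: an H x W map becomes W values."""
    height = len(feature)
    return [sum(col) / height for col in zip(*feature)]


def strip_context(feature: Matrix) -> Matrix:
    """Broadcast both strip summaries back to H x W and add them.

    Assumption: element-wise summation is used here only as a simple
    stand-in for whatever fusion the actual module applies.
    """
    rows = horizontal_avg_pool(feature)
    cols = vertical_avg_pool(feature)
    return [[r + c for c in cols] for r in rows]


feature_map = [
    [1.0, 2.0, 3.0],
    [4.0, 5.0, 6.0],
]
print(horizontal_avg_pool(feature_map))  # [2.0, 5.0]
print(vertical_avg_pool(feature_map))    # [2.5, 3.5, 4.5]
print(strip_context(feature_map))        # [[4.5, 5.5, 6.5], [7.5, 8.5, 9.5]]
```

Each output position combines a summary of its whole row with a summary of its whole column, which is how strip-shaped pooling lets a position see long-range context along both axes.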


Sihao WANG, Duzhen ZHANG, Changchang YANG. Skin lesion image segmentation based on dual-path attention mechanism and multi-scale information fusion[J]. Journal of Computer Applications, 2025, 45(12): 4045-4054.

| Network | ISIC2017 Dice/% | ISIC2017 IoU/% | ISIC2017 Acc/% | ISIC2017 Spe/% | ISIC2018 Dice/% | ISIC2018 IoU/% | ISIC2018 Acc/% | ISIC2018 Spe/% | Params/10⁶ |
|---|---|---|---|---|---|---|---|---|---|
| U-Net | 82.30 | 69.92 | 94.43 | 97.86 | 85.18 | 74.18 | 92.99 | 96.26 | 32.08 |
| UNet++ | 86.60 | 76.37 | 95.59 | 97.66 | 87.70 | 78.09 | 94.06 | 96.31 | 47.19 |
| Attention U-Net | 85.29 | 74.35 | 95.27 | 97.95 | 86.83 | 76.73 | 93.77 | 96.56 | 34.88 |
| Swin-Unet | 85.00 | 73.91 | 95.06 | 97.36 | 85.74 | 75.04 | 93.15 | 95.90 | 41.34 |
| TransUNet | 84.47 | 73.12 | 94.97 | 97.65 | 85.70 | 74.98 | 93.28 | 96.67 | 54.86 |
| UCTransNet | 87.01 | 77.00 | 95.66 | 97.42 | 87.14 | 77.21 | 93.76 | 97.55 | 66.24 |
| MALUNet | 86.87 | 76.79 | 95.60 | 97.33 | | | | 96.13 | 0.175 |
| DCSAU-Net | | | 95.93 | 98.38 | 87.14 | 77.21 | 93.95 | | |
| Proposed network | 87.43 | 77.66 | | 97.73 | 89.02 | 80.22 | 94.71 | 96.83 | 19.49 |

Tab. 1 Comparison of segmentation results for different networks on ISIC2017 and ISIC2018 datasets
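The percentage-point gains over U-Net quoted in the abstract can be reproduced from the U-Net and proposed-network rows of Tab. 1. The short check below is only a worked arithmetic example; the dictionary layout and the helper name `gain_in_points` are illustrative choices, not part of the paper.

```python
# Dice and IoU (%) copied from Tab. 1, ordered as (ISIC2017, ISIC2018).
unet = {"Dice": (82.30, 85.18), "IoU": (69.92, 74.18)}
proposed = {"Dice": (87.43, 89.02), "IoU": (77.66, 80.22)}


def gain_in_points(ours, baseline):
    """Difference in percentage points, rounded to two decimals."""
    return tuple(round(o - b, 2) for o, b in zip(ours, baseline))


gains = {metric: gain_in_points(proposed[metric], unet[metric]) for metric in unet}
print(gains)  # {'Dice': (5.13, 3.84), 'IoU': (7.74, 6.04)}
```

These differences are absolute percentage points, not relative improvements.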


| Network | ISIC2017-trained Dice | ISIC2017-trained IoU | ISIC2017-trained Acc | ISIC2017-trained Spe | ISIC2018-trained Dice | ISIC2018-trained IoU | ISIC2018-trained Acc | ISIC2018-trained Spe |
|---|---|---|---|---|---|---|---|---|
| U-Net | 86.33 | 75.95 | 92.96 | | 88.22 | 78.92 | 93.69 | 97.38 |
| UNet++ | 90.82 | 83.19 | 95.08 | 98.29 | 92.61 | 86.24 | 95.92 | 97.75 |
| Attention U-Net | 89.11 | 80.36 | 94.24 | 98.22 | 90.62 | 82.85 | 94.87 | 97.43 |
| Swin-Unet | 90.56 | 82.75 | 94.87 | 97.60 | 90.80 | 83.16 | 94.86 | 96.62 |
| TransUNet | 89.02 | 80.21 | 94.17 | 98.04 | 89.29 | 80.67 | 94.26 | 97.67 |
| UCTransNet | | | | 97.14 | 93.06 | 87.01 | 96.12 | 97.48 |
| MALUNet | 91.50 | 84.33 | 95.34 | 97.63 | 92.21 | 85.55 | 95.66 | 97.19 |
| DCSAU-Net | 91.44 | 84.23 | 95.29 | 97.48 | 91.36 | 84.10 | 95.37 | 98.48 |
| Proposed network | 91.81 | 84.85 | 95.54 | 98.07 | | | | |

Tab. 2 Comparison of segmentation results for different networks on fusion dataset (%)
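For readers comparing the Dice and IoU columns, the sketch below shows the standard definitions of both metrics from pixel counts and the identity IoU = Dice / (2 - Dice), which holds when both are computed from the same counts. The function names and the toy counts are assumptions for illustration; how the paper aggregates the metrics over images is not stated here, although the reported values appear consistent with this identity.

```python
def dice_and_iou(tp: int, fp: int, fn: int):
    """Dice and IoU from true-positive, false-positive, false-negative pixel counts."""
    dice = 2 * tp / (2 * tp + fp + fn)
    iou = tp / (tp + fp + fn)
    return dice, iou


def iou_from_dice(dice: float) -> float:
    """Convert Dice to IoU (both as fractions in [0, 1])."""
    return dice / (2 - dice)


# Toy example: 80 correctly segmented pixels, 10 false positives, 10 false negatives.
dice, iou = dice_and_iou(tp=80, fp=10, fn=10)
assert abs(iou_from_dice(dice) - iou) < 1e-12

# U-Net with ISIC2017 weights in Tab. 2 reports Dice 86.33 and IoU 75.95.
print(round(100 * iou_from_dice(0.8633), 2))  # 75.95
```

Because IoU is a monotonic function of Dice under this identity, the two metrics rank networks identically whenever they are computed from the same counts.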


| Network | Hair occlusion Dice | Hair occlusion IoU | Hair occlusion Acc | Blurred edge Dice | Blurred edge IoU | Blurred edge Acc | Varying scale Dice | Varying scale IoU | Varying scale Acc | Low contrast Dice | Low contrast IoU | Low contrast Acc | Irregular boundary Dice | Irregular boundary IoU | Irregular boundary Acc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| U-Net | 86.64 | 76.42 | 92.76 | 76.57 | 62.04 | 88.65 | 83.71 | 71.98 | 88.76 | 76.59 | 62.06 | 90.47 | 93.02 | 86.96 | 94.98 |
| UNet++ | 91.02 | 83.53 | 94.93 | 83.71 | 71.98 | 91.57 | 90.36 | 82.43 | 92.93 | 85.49 | 74.65 | 93.53 | 94.11 | 88.88 | 95.61 |
| Attention U-Net | 89.48 | 80.96 | 94.17 | 82.35 | 70.00 | 91.00 | 87.30 | 77.46 | 90.97 | 83.16 | 71.17 | 92.67 | 93.32 | 87.47 | 95.73 |
| Swin-Unet | 88.72 | 80.96 | 93.53 | 84.96 | 73.85 | 92.13 | 91.93 | 85.07 | 93.90 | 84.95 | 73.84 | 93.32 | 94.29 | 89.20 | 95.73 |
| TransUNet | 89.48 | 80.96 | 94.13 | 81.13 | 68.25 | 90.38 | 88.12 | 78.76 | 91.47 | 82.30 | 69.92 | 92.29 | 92.60 | 86.22 | 94.51 |
| UCTransNet | 93.12 | 87.13 | 95.97 | 87.42 | 77.65 | 92.99 | 92.07 | 85.31 | 93.98 | | | | 92.90 | 86.74 | 94.50 |
| MALUNet | 91.19 | 83.80 | 94.94 | | | | 92.43 | 85.92 | 94.25 | 86.92 | 76.86 | 93.98 | 94.79 | 90.10 | 96.07 |
| DCSAU-Net | 92.10 | 85.35 | 95.38 | 83.89 | 72.25 | 91.37 | 94.52 | 89.60 | 95.70 | 84.58 | 73.28 | 92.82 | 93.74 | 88.22 | 95.27 |
| Proposed network | | | | 85.22 | 74.25 | 92.22 | | | | 89.45 | 80.91 | 95.05 | | | |

Tab. 3 Comparison of segmentation results for different networks across different scenarios (%)


Fig. 7 Comparison of segmentation results on fusion dataset for different networks after loading ISIC2017 training weights

| Modules enabled (of FM, AttMSFE, MCEM, DGConv) | Dice | IoU | Acc | Spe |
|---|---|---|---|---|
| None | 85.18 | 74.18 | 92.99 | 96.26 |
| One | 86.69 | 76.50 | 93.46 | 95.36 |
| One | 86.00 | 75.44 | 93.29 | 96.03 |
| One | 85.97 | 75.40 | 93.34 | 96.40 |
| One | 86.85 | 76.76 | 93.70 | 96.38 |
| Two | 87.13 | 77.20 | 93.83 | 96.40 |
| Two | 87.29 | 77.45 | 93.80 | 95.83 |
| Two | 87.36 | 77.56 | 93.81 | 95.67 |
| Three | 88.54 | 79.43 | 94.39 | 96.15 |
| All four | 89.02 | 80.22 | 94.71 | 96.83 |

Tab. 4 Ablation experimental results for different modules (%)


| Method | Dice | IoU | Acc |
|---|---|---|---|
| BCE | 88.08 | 78.70 | 94.23 |
| Dice | 80.38 | 67.20 | 90.56 |
| Dice+BCE | 89.02 | 80.22 | 94.71 |

Tab. 5 Ablation experimental results for loss functions (%)
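The Dice+BCE row in Tab. 5 refers to a loss that combines binary cross-entropy with a Dice term. A minimal plain-Python sketch over flattened pixel probabilities is given below. The equal weights `w_bce` and `w_dice`, the smoothing constant `smooth`, and the clamping value `eps` are assumptions for illustration, since the exact formulation used in the paper is not given on this page.

```python
import math
from typing import Sequence


def bce_loss(probs: Sequence[float], targets: Sequence[float], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy; probabilities are clamped to avoid log(0)."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1 - eps)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(probs)


def dice_loss(probs: Sequence[float], targets: Sequence[float], smooth: float = 1.0) -> float:
    """Soft Dice loss: 1 minus the smoothed Dice coefficient."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1 - (2 * intersection + smooth) / (denominator + smooth)


def dice_bce_loss(probs, targets, w_bce: float = 1.0, w_dice: float = 1.0) -> float:
    """Weighted sum of both terms (equal weights are an assumption)."""
    return w_bce * bce_loss(probs, targets) + w_dice * dice_loss(probs, targets)


mask = [1.0, 0.0, 1.0, 0.0]
confident = dice_bce_loss([0.9, 0.1, 0.9, 0.1], mask)
uncertain = dice_bce_loss([0.5, 0.5, 0.5, 0.5], mask)
print(confident < uncertain)  # True
```

The cross-entropy term penalizes each pixel independently, while the Dice term scores region overlap and is less sensitive to the imbalance between lesion and background pixels, which is a common motivation for combining the two.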


