Journal of Computer Applications (official website), 2025, Vol. 45, Issue (7): 2195-2202. DOI: 10.11772/j.issn.1001-9081.2024060862

Received: 2024-06-25

Revised: 2024-09-14

Accepted: 2024-09-18

Online: 2025-07-10

Published: 2025-07-10

Corresponding author: Aihua DONG

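About the author: XIANG Erkang, born in 2000, male (Tujia), from Xiangxi, Hunan, M. S. candidate. His research interests include image recognition and open set recognition.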

Erkang XIANG¹, Rong HUANG¹,², Aihua DONG¹,²

Abstract:

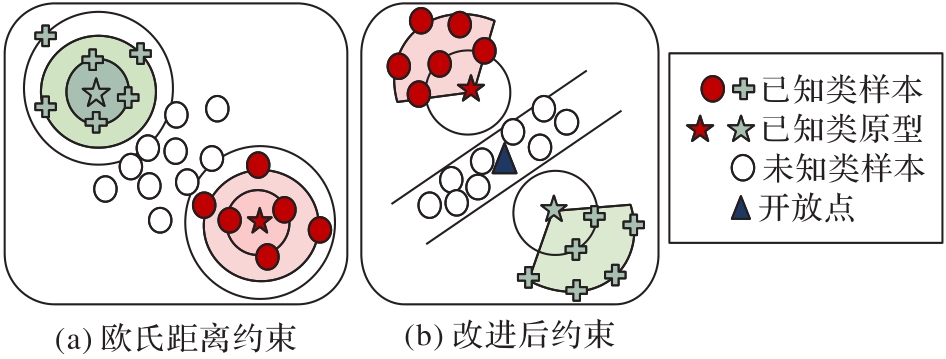

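When a Deep Neural Network (DNN) encounters samples from classes it did not see during training, it cannot reliably reject these unknown-class samples; open set recognition classifies known-class samples accurately while rejecting unknown-class samples. Prototype learning methods are widely used in open set recognition, but none of them ensures both compactness within each class's sample distribution and separability between the distributions of different classes. Therefore, an Open set recognition method with Open Generation and Feature Optimization (OGFO) was proposed. Firstly, the concept of an open point was introduced: each prototype point learns the intrinsic features of the samples of its class through the DNN, whereas the open point is the mean of the prototype points of all classes. The open point represents the intrinsic features of unknown classes and occupies the central region of the feature space, which is the open space where unknown-class samples are distributed. Secondly, an open point based Feature Optimization Algorithm (FOA) was proposed, which uses the open point to make the distribution of samples within each class more compact and to push the distributions of different classes further apart. Finally, an open point based generation method, OGAN (Open Generative Adversarial Network), was proposed, and the DNN was used to force the unknown-class samples generated by OGAN into the open space occupied by the open point. Experimental results show that, compared with Adversarial Reciprocal Points Learning (ARPL) for open set recognition, OGFO achieves higher AUROC (Area Under the Receiver Operating Characteristic curve) on the MNIST, SVHN, CIFAR10 and TinyImageNet datasets. On TinyImageNet in particular, AUROC increases by at least 3 percentage points, and accuracy and OSCR (Open Set Classification Rate) increase by at least 6 and 5 percentage points respectively. These results indicate that OGFO addresses the inability of other methods to ensure intra-class compactness and inter-class separability at the same time.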
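The abstract defines the open point only as the mean of the class prototypes and places the unknown classes in the central region around it. The plain-Python sketch below shows that computation together with two pieces the abstract does not specify: a rejection rule that labels a feature as unknown when it lies within a threshold of the open point, and an OGAN-style penalty that pulls generated features toward the open point. `classify_or_reject`, `open_space_pull` and `threshold` are hypothetical names chosen for illustration; the paper's actual losses and decision rule may differ.

```python
import math
from typing import List, Sequence

Vector = List[float]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def open_point(prototypes: Sequence[Sequence[float]]) -> Vector:
    """Open point = component-wise mean of all class prototypes (as stated in the abstract)."""
    n = len(prototypes)
    return [sum(p[d] for p in prototypes) / n for d in range(len(prototypes[0]))]


def classify_or_reject(feature, prototypes, threshold: float) -> int:
    """HYPOTHETICAL decision rule: return -1 (unknown) if the feature lies
    within `threshold` of the open point, otherwise the nearest prototype's index."""
    if euclidean(feature, open_point(prototypes)) < threshold:
        return -1
    distances = [euclidean(feature, p) for p in prototypes]
    return min(range(len(prototypes)), key=distances.__getitem__)


def open_space_pull(generated_features, prototypes) -> float:
    """HYPOTHETICAL OGAN-style penalty: mean squared distance of generated
    features to the open point; minimizing it pulls them into the open space."""
    o = open_point(prototypes)
    return sum(euclidean(g, o) ** 2 for g in generated_features) / len(generated_features)


if __name__ == "__main__":
    prototypes = [[2.0, 0.0], [0.0, 2.0], [-2.0, 0.0], [0.0, -2.0]]
    print(open_point(prototypes))                           # [0.0, 0.0]
    print(classify_or_reject([1.9, 0.1], prototypes, 0.5))  # 0
    print(classify_or_reject([0.1, 0.1], prototypes, 0.5))  # -1
```

With four symmetric prototypes the open point is the origin, so a feature near a prototype is assigned to that class while a feature near the origin is rejected. A distance threshold is only the simplest choice here; a learned score would serve the same role.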

Erkang XIANG, Rong HUANG, Aihua DONG. Open set recognition method with open generation and feature optimization[J]. Journal of Computer Applications, 2025, 45(7): 2195-2202.

Fig. 4 Comparison of sample distributions under the Euclidean distance constraint and under the open point based cosine loss constraint
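Fig. 4 contrasts a Euclidean distance constraint with an open point based cosine loss. The exact formula is not reproduced in this section, so the sketch below assumes one plausible form: 1 minus the cosine of the angle between the vector from the open point to the feature and the vector from the open point to the class prototype. Both function names are hypothetical, and this is an illustration of an angular constraint, not the paper's loss.

```python
import math
from typing import List, Sequence


def _sub(a: Sequence[float], b: Sequence[float]) -> List[float]:
    return [x - y for x, y in zip(a, b)]


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def euclidean_loss(feature, prototype) -> float:
    """Squared Euclidean distance between a feature and its class prototype."""
    d = _sub(feature, prototype)
    return _dot(d, d)


def open_point_cosine_loss(feature, prototype, open_pt, eps: float = 1e-12) -> float:
    """HYPOTHETICAL form: 1 - cos(feature - open point, prototype - open point).
    Zero when the feature lies on the ray from the open point through its prototype."""
    u = _sub(feature, open_pt)
    v = _sub(prototype, open_pt)
    cos = _dot(u, v) / (math.sqrt(_dot(u, u)) * math.sqrt(_dot(v, v)) + eps)
    return 1.0 - cos


if __name__ == "__main__":
    o, p = [0.0, 0.0], [2.0, 0.0]
    for f in ([4.0, 0.0], [1.5, 1.5]):
        print(f, euclidean_loss(f, p), round(open_point_cosine_loss(f, p, o), 4))
```

In the example, the feature [4, 0] lies beyond its prototype [2, 0] in the correct direction, while [1.5, 1.5] is closer to the prototype but off that direction. The Euclidean loss penalizes the first more (4.0 versus 2.5); the assumed cosine loss is essentially zero for the first and about 0.29 for the second, so it separates classes by direction from the open point rather than by raw distance.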

Tab. 1 Comparison of AUROC, accuracy and OSCR of different methods (%)

| Dataset | Method | AUROC | Accuracy | OSCR | Dataset | Method | AUROC | Accuracy | OSCR |
|---|---|---|---|---|---|---|---|---|---|
| MNIST | Softmax | 97.9 | 99.5 | 99.2 | CIFAR+10 | Softmax | 81.6 | 96.3 | 90.9 |
| | Openmax | 98.0 | 99.5 | — | | Openmax | 81.7 | — | — |
| | G-OpenMax | 98.8 | 99.6 | — | | G-OpenMax | 83.8 | — | — |
| | CROSR | 99.1 | 99.2 | — | | CROSR | 91.2 | — | — |
| | C2AE | 98.8 | 99.0 | 99.6 | | C2AE | 95.5 | — | — |
| | RPL | 98.8 | 99.8 | 99.4 | | RPL | 84.2 | 96.5 | 91.8 |
| | GCPL | 99.3 | 99.8 | 99.1 | | GCPL | 88.1 | 96.4 | 90.9 |
| | ARPL | 99.6 | 99.7 | 99.4 | | ARPL | 96.5 | 96.4 | 93.5 |
| | ARPL+CS | 99.7 | 99.7 | 99.5 | | ARPL+CS | 97.1 | 97.1 | 94.7 |
| | ODL | 99.5 | — | 99.4 | | ODL | 89.1 | — | 92.5 |
| | ODL+ | 99.6 | — | 99.5 | | ODL+ | 91.1 | — | 93.2 |
| | OGFO | 99.7 | 99.8 | 99.5 | | OGFO | 96.8 | 97.4 | 94.2 |
| SVHN | Softmax | 88.5 | 94.7 | 92.8 | CIFAR+50 | Softmax | 80.5 | 96.4 | 88.5 |
| | Openmax | 89.3 | 94.7 | — | | Openmax | 79.6 | — | — |
| | G-OpenMax | 90.8 | 94.8 | — | | G-OpenMax | 82.7 | — | — |
| | CROSR | 89.9 | 94.5 | — | | CROSR | 90.5 | — | — |
| | C2AE | 92.0 | 95.3 | 95.1 | | C2AE | 93.7 | — | — |
| | RPL | 93.2 | 96.9 | 93.6 | | RPL | 83.2 | 96.6 | 89.6 |
| | GCPL | 93.2 | 96.7 | 92.8 | | GCPL | 87.9 | 96.4 | 88.5 |
| | ARPL | 96.3 | 96.6 | 94.0 | | ARPL | 94.3 | 96.4 | 91.6 |
| | ARPL+CS | 96.7 | 96.7 | 94.3 | | ARPL+CS | 95.1 | 97.2 | 92.9 |
| | ODL | 94.3 | — | 93.4 | | ODL | 88.3 | — | 89.8 |
| | ODL+ | 95.4 | — | 94.1 | | ODL+ | 90.6 | — | 90.3 |
| | OGFO | 97.3 | 97.2 | 94.5 | | OGFO | 94.9 | 97.5 | 93.1 |
| CIFAR10 | Softmax | 67.6 | 80.1 | 83.8 | TinyImageNet | Softmax | 57.7 | 73.3 | 60.8 |
| | Openmax | 69.3 | 80.1 | — | | Openmax | 57.6 | — | — |
| | G-OpenMax | 69.4 | 81.6 | — | | G-OpenMax | 58.6 | — | — |
| | CROSR | 88.3 | 93.0 | — | | CROSR | 58.9 | — | — |
| | C2AE | 89.5 | 93.8 | 82.1 | | C2AE | 74.8 | — | — |
| | RPL | 82.7 | 94.6 | 85.2 | | RPL | 68.8 | 62.8 | 53.2 |
| | GCPL | 84.8 | 92.4 | 83.8 | | GCPL | 63.9 | 62.3 | 59.3 |
| | ARPL | 90.1 | 94.5 | 86.6 | | ARPL | 76.2 | 76.1 | 62.3 |
| | ARPL+CS | 90.7 | 95.4 | 87.9 | | ARPL+CS | 78.2 | 79.8 | 65.9 |
| | ODL | 85.7 | — | 84.8 | | ODL | 76.4 | — | 64.3 |
| | ODL+ | 88.5 | — | 86.9 | | ODL+ | 74.6 | — | 59.2 |
| | OGFO | 90.6 | 96.1 | 87.5 | | OGFO | 79.6 | 82.7 | 67.8 |
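The AUROC and OSCR columns of Tab. 1 are computed from per-sample confidence scores. The sketch below assumes that a higher score means "more likely known" (for example, the maximum class score). It computes AUROC as the probability that a random known sample outscores a random unknown sample, and OSCR as the area under the curve of the correct classification rate (known samples that are correctly classified and scored at or above a threshold) against the false positive rate (unknown samples scored at or above the same threshold). The trapezoidal integration and the pairwise AUROC loop are illustrative choices; published evaluation scripts may integrate slightly differently and use faster sorting-based AUROC.

```python
from typing import Sequence


def auroc(known_scores: Sequence[float], unknown_scores: Sequence[float]) -> float:
    """P(score of a random known sample > score of a random unknown sample); ties count 1/2.
    O(n*m) pairwise version, fine for small illustrative inputs."""
    wins = 0.0
    for k in known_scores:
        for u in unknown_scores:
            wins += 1.0 if k > u else 0.5 if k == u else 0.0
    return wins / (len(known_scores) * len(unknown_scores))


def oscr(known_scores, known_correct, unknown_scores) -> float:
    """Area under the CCR-vs-FPR curve, sweeping every observed score as the threshold t.
    CCR: fraction of known samples that are correctly classified AND score >= t.
    FPR: fraction of unknown samples that score >= t."""
    n_k, n_u = len(known_scores), len(unknown_scores)
    points = [(0.0, 0.0)]  # (FPR, CCR) for a threshold above every score
    for t in sorted(set(known_scores) | set(unknown_scores), reverse=True):
        ccr = sum(1 for s, ok in zip(known_scores, known_correct) if ok and s >= t) / n_k
        fpr = sum(1 for s in unknown_scores if s >= t) / n_u
        points.append((fpr, ccr))
    # Trapezoidal rule along the FPR axis (FPR reaches 1 at the lowest threshold).
    return sum((x1 - x0) * (y0 + y1) / 2.0 for (x0, y0), (x1, y1) in zip(points, points[1:]))


if __name__ == "__main__":
    known = [0.9, 0.8, 0.3]          # max class scores of known-class test samples
    correct = [True, True, False]    # whether each closed-set prediction was right
    unknown = [0.5, 0.2]             # max class scores of unknown-class samples
    print(round(auroc(known, unknown), 4), round(oscr(known, correct, unknown), 4))
```

Here every correctly classified known sample outscores every unknown sample, so OSCR equals the closed-set accuracy (2/3) and AUROC is 5/6. Unknown samples with high scores, or correct known samples with low scores, pull OSCR below the accuracy, which is why the two columns in Tab. 1 can move independently.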

Tab. 2 Comparison of out-of-domain experimental results with MNIST as the known classes and KMNIST, SVHN and CIFAR10 as the unknown classes (%)

| Dataset (unknown classes) | Method | AUROC | OSCR |
|---|---|---|---|
| KMNIST | Softmax | 93.8 | 96.0 |
| | GCPL | 85.3 | 84.2 |
| | RPL | 97.4 | 74.3 |
| | OGFO | 98.2 | 97.2 |
| SVHN | Softmax | 97.4 | 96.5 |
| | GCPL | 98.6 | 96.9 |
| | RPL | 98.7 | 76.1 |
| | OGFO | 98.9 | 97.7 |
| CIFAR10 | Softmax | 96.4 | 96.4 |
| | GCPL | 98.1 | 96.5 |
| | RPL | 98.8 | 76.1 |
| | OGFO | 98.7 | 96.9 |

Tab. 3 Ablation study results (%)

| Method | Accuracy | AUROC | OSCR |
|---|---|---|---|
| Centerloss | 99.5 | 99.5 | 99.2 |
| Centerloss + $L_{\mathrm{vec}}$ | 99.5 | 99.2 | 99.0 |
| Centerloss + $L_{\mathrm{quarter}}$ | 99.6 | 99.4 | 99.3 |
| No OGAN | 99.6 | 99.6 | 99.2 |
| OGFO | 99.8 | 99.7 | 99.5 |
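The ablation baseline in Tab. 3 is labelled Centerloss. Assuming it refers to the standard center loss of Wen et al. (2016), the sketch below shows that loss, $L_C = \frac{1}{2}\sum_i \lVert x_i - c_{y_i}\rVert_2^2$, and its per-class center update with rate `alpha`. This illustrates the generic technique rather than the paper's code; the additional loss terms in Tab. 3 are not modelled because this section does not define them.

```python
from typing import List


def center_loss(features, labels, centers) -> float:
    """L_C = 1/2 * sum_i ||x_i - c_{y_i}||^2 over a mini-batch."""
    total = 0.0
    for x, y in zip(features, labels):
        total += sum((a - b) ** 2 for a, b in zip(x, centers[y]))
    return 0.5 * total


def update_centers(features, labels, centers, alpha: float = 0.5) -> List[List[float]]:
    """Center update: c_j <- c_j - alpha * delta_j, where
    delta_j = sum_{i: y_i = j} (c_j - x_i) / (1 + number of class-j samples in the batch)."""
    new_centers = [list(c) for c in centers]
    for j, c in enumerate(centers):
        members = [x for x, y in zip(features, labels) if y == j]
        if not members:
            continue  # classes absent from the batch keep their current center
        delta = [sum(c[d] - x[d] for x in members) / (1 + len(members)) for d in range(len(c))]
        new_centers[j] = [c[d] - alpha * delta[d] for d in range(len(c))]
    return new_centers


if __name__ == "__main__":
    feats, labels = [[1.0, 0.0], [3.0, 0.0]], [0, 0]
    centers = [[0.0, 0.0], [5.0, 5.0]]
    print(center_loss(feats, labels, centers))     # 5.0
    print(update_centers(feats, labels, centers))  # class-0 center moves toward its samples
```

Center loss only pulls each sample toward its own center; it has no term that pushes different classes apart, which is consistent with the abstract's motivation for adding an open point based constraint on inter-class separation.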

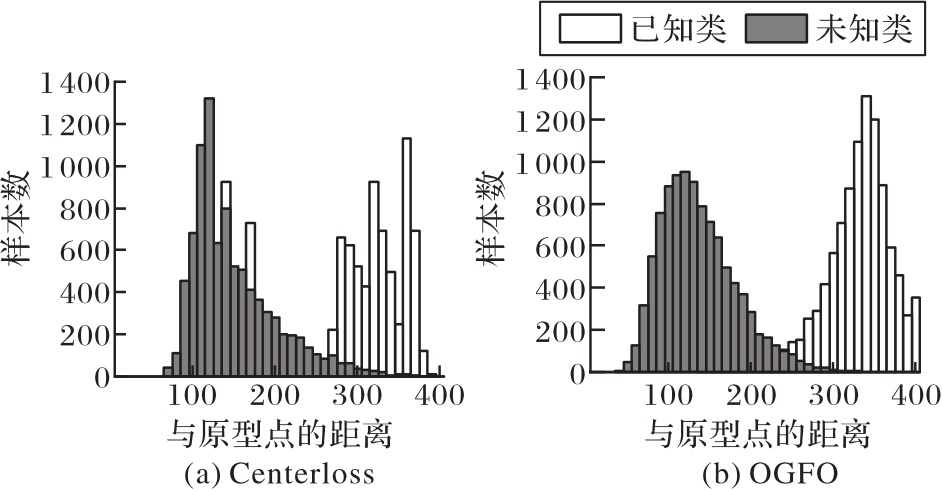

Fig. 7 Number of samples at each sample-to-prototype distance under the Centerloss and OGFO methods
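Fig. 7 counts samples by their distance to the prototype points. The sketch below shows one way to produce such counts: measure each sample's Euclidean distance to its own class prototype and bucket the distances into fixed-width bins. The function name and `bin_width` are hypothetical; this section does not state how the figure's distances were discretized.

```python
import math
from collections import Counter
from typing import Dict


def distance_histogram(features, labels, prototypes, bin_width: float = 0.5) -> Dict[int, int]:
    """Count samples per distance bin; bin k covers [k*bin_width, (k+1)*bin_width).
    Distance is Euclidean, measured to each sample's own class prototype."""
    counts = Counter()
    for x, y in zip(features, labels):
        d = math.dist(x, prototypes[y])  # math.dist requires Python 3.8+
        counts[math.floor(d / bin_width)] += 1
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    prototypes = [[0.0, 0.0]]
    features = [[0.1, 0.0], [0.2, 0.0], [0.0, 0.7], [1.2, 0.0]]
    print(distance_histogram(features, [0, 0, 0, 0], prototypes))  # {0: 2, 1: 1, 2: 1}
```

A more compact intra-class distribution shifts the counts toward the low bins, so running the same histogram on features from two models gives a direct visual comparison of compactness.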
